Introduction to Generalized Linear Models using Pynapple and NeMoS#

Authors: Camila Maura, Edoardo Balzani & Guillaume Viejo

In this notebook, we will use the Pynapple and NeMoS packages (supported by the Flatiron Institute) to model spiking neural data using Generalized Linear Models (GLMs). We will explain what GLMs are and what their components are, then use the Pynapple and NeMoS Python packages to preprocess real data from the primary visual cortex (VISp) of mice and fit a GLM that predicts spiking activity as a function of passive visual stimuli. We will also show how, if you have simultaneous recordings from a large population of neurons, you can build connections between the neurons into the GLM in the form of coupling filters.

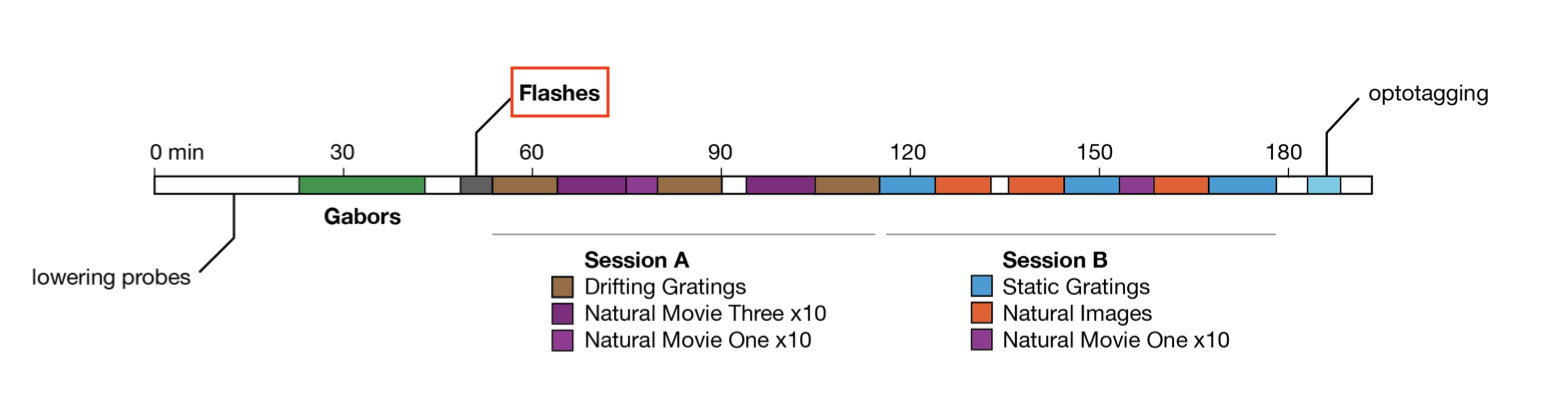

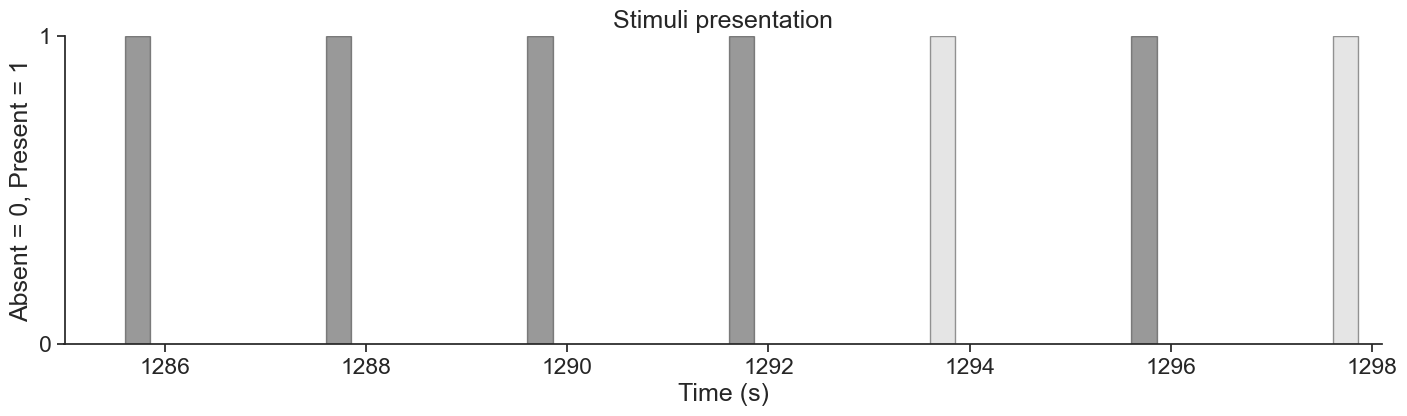

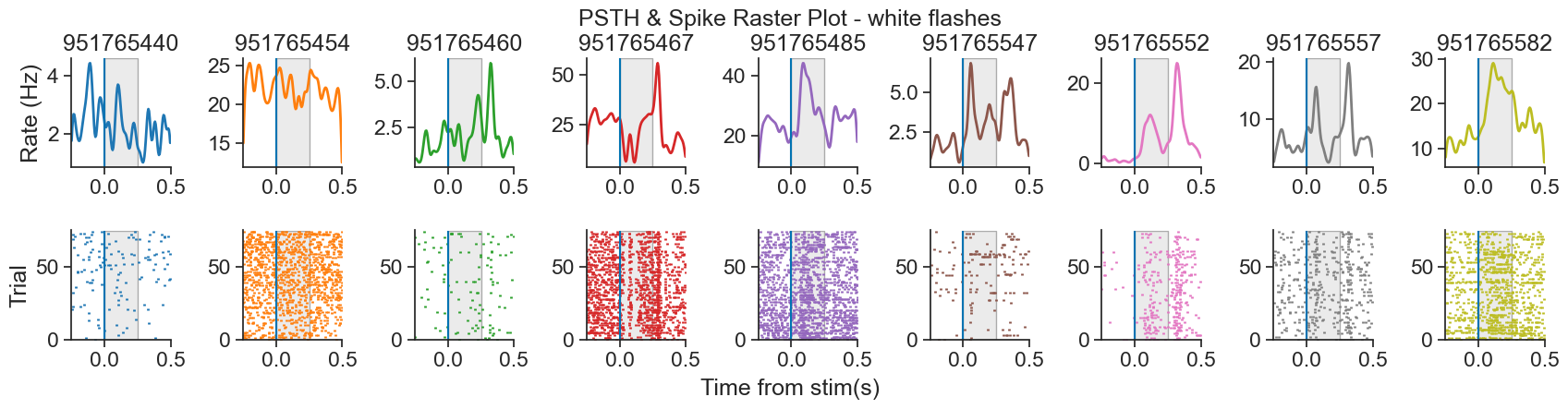

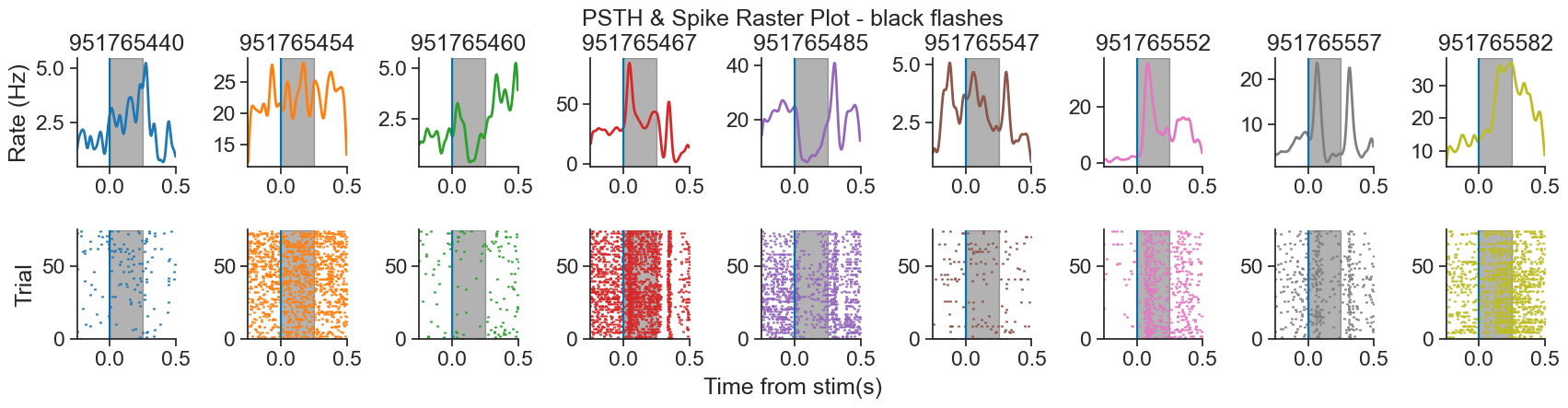

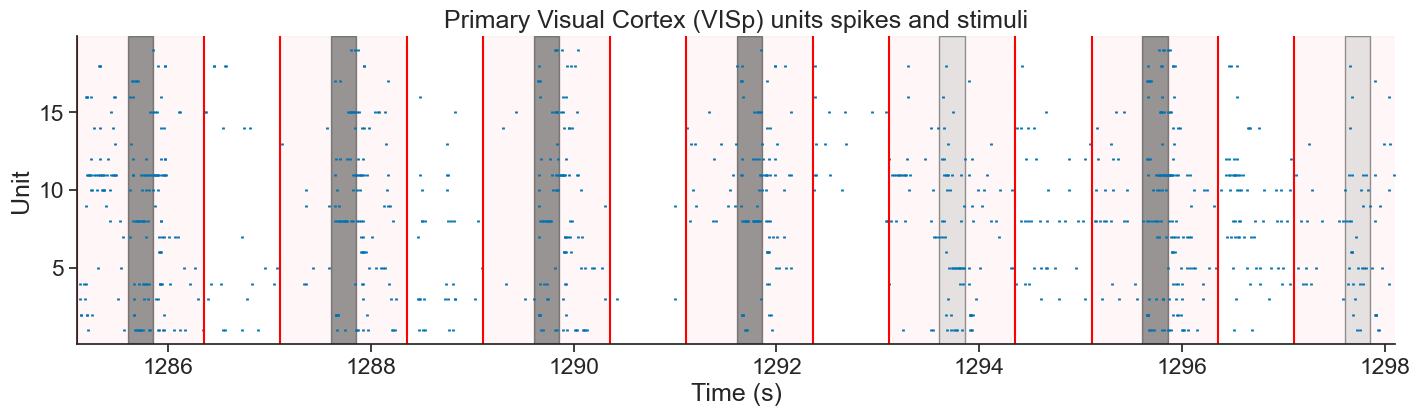

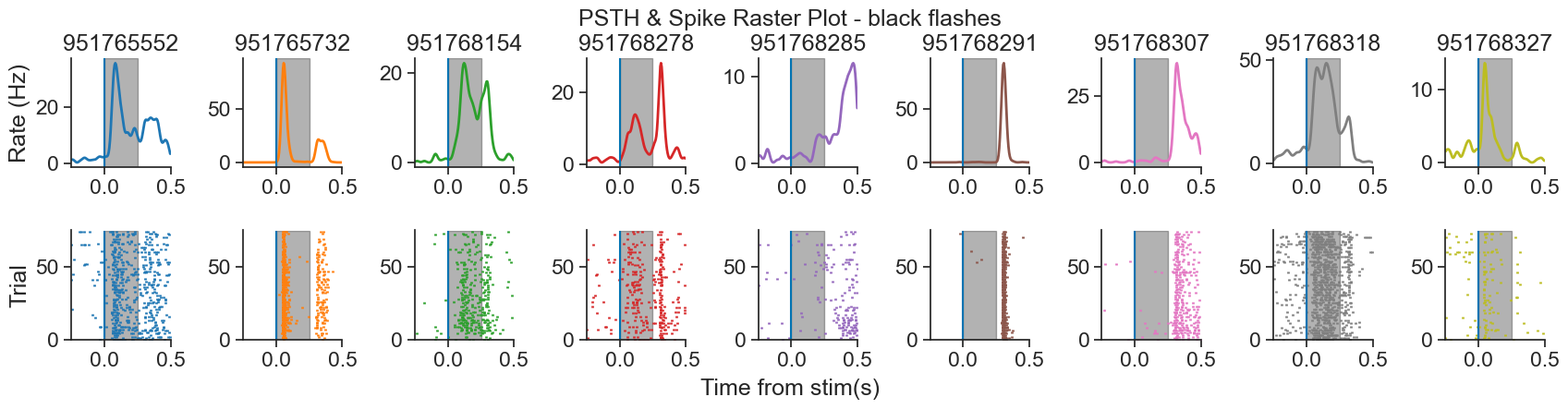

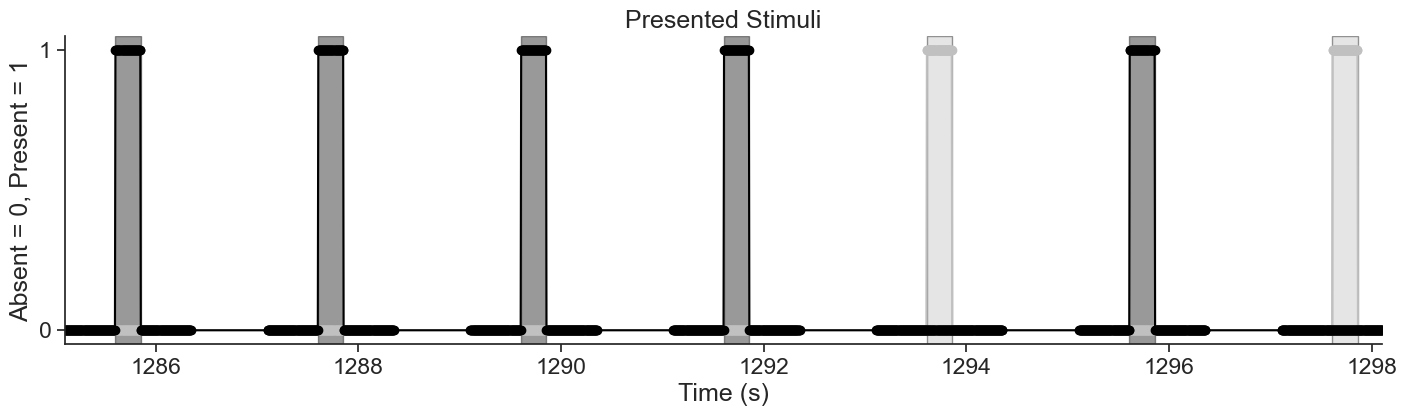

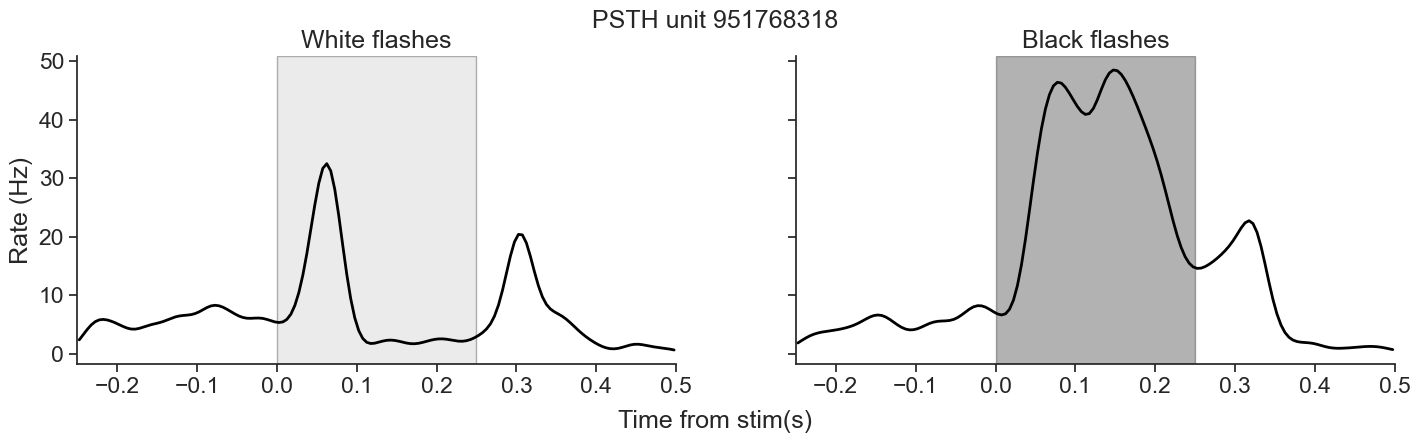

We will be analyzing data from the Visual Coding - Neuropixels dataset, published by the Allen Institute. This dataset was recorded with extracellular electrophysiology probes, which capture spikes from multiple regions in the brain during passive visual stimulation. For simplicity, we will focus on the activity of neurons in the visual cortex (VISp) during passive exposure to full-field flashes, either black (coded as “-1.0”) or white (coded as “1.0”), on a gray background.

We have three main goals in this notebook:

Introduce the key components of Generalized Linear Models (GLMs),

Demonstrate how to pre-process real experimental data recorded from mice using Pynapple, and

Use NeMoS to fit GLMs to that data and explore model-based insights.

By the end of this notebook, you should have a clearer understanding of the fundamental building blocks of GLMs, as well as how Pynapple and NeMoS can streamline the process of modeling and analyzing neural data.

Background on GLMs#

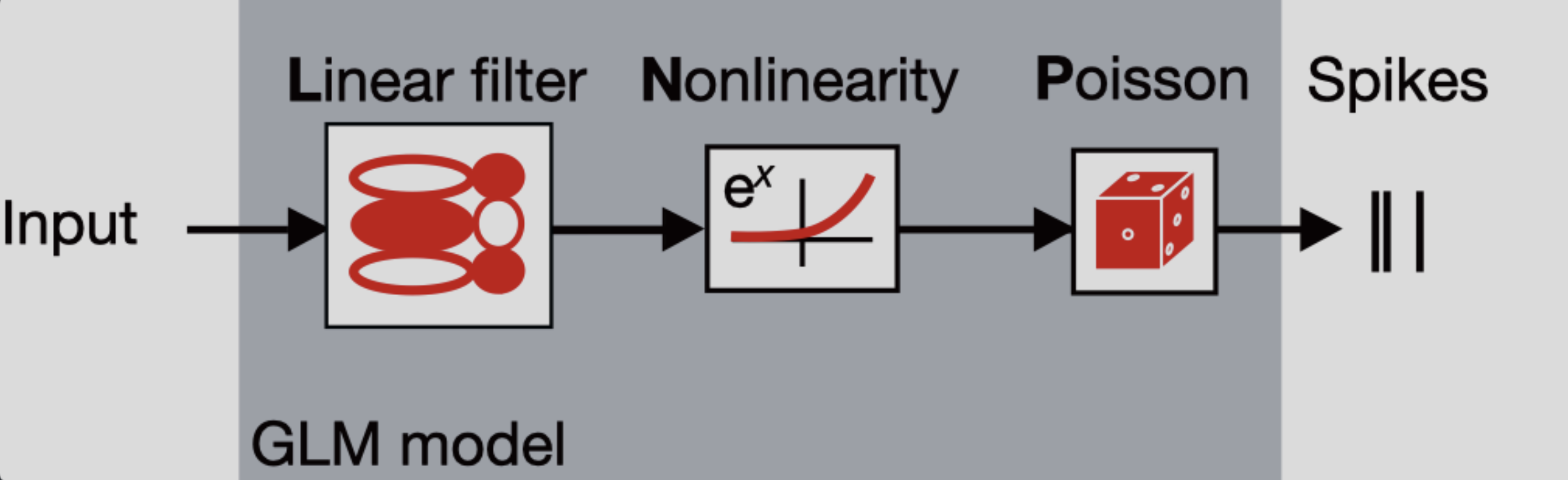

A GLM is a regression model that learns a filter to predict a value (the output) from one or more other variables (the inputs). In the neuroscience context, we can use a particular type of GLM to predict spikes: the linear-nonlinear-Poisson (LNP) model. This type of model receives one or more inputs, sends them through a linear “filter” or transformation, passes the result through a nonlinearity to get the firing rate, and uses that firing rate as the mean of a Poisson distribution to generate spikes. We will go through each of these steps one by one:

Sends the inputs through a linear “filter” or transformation

The inputs (also known as “predictors” or “regressors”) are first passed through a linear transformation:

\[ \begin{aligned} L(X) = WX + c \end{aligned} \]

where \(X\) is the input (in matrix form), \(W\) is a matrix of weights, and \(c\) is a vector (the intercept).

\(L\) scales (makes bigger or smaller) or shifts (up or down) the input. When there is zero input, the output of \(L\) is just the intercept \(c\), which is why the intercept can be interpreted as setting the baseline rate of the neuron. So far, this is the same as ordinary linear regression.
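As a minimal sketch of this linear stage, the NumPy snippet below applies a weight vector and an intercept to a small design matrix. The shapes (time points on rows, a single output neuron) and all numerical values are illustrative assumptions, which is why the product is written `X @ w + c` rather than \(WX\). Nothing here is NeMoS API.

```python
import numpy as np

# Hypothetical design matrix: 4 time points (rows) x 2 predictors (columns).
X = np.array([
    [0.0, 0.0],   # no input at all
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, -1.0],
])
w = np.array([0.8, -0.3])  # illustrative weights (the "filter")
c = -1.0                   # illustrative intercept (baseline term)


def linear_stage(X, w, c):
    """Return L(X) = Xw + c, one value per time point."""
    return X @ w + c


L = linear_stage(X, w, c)
print(L)
```

For the first row, where the input is zero, the output is just the intercept `c`. Note that some outputs are negative, so they cannot be used directly as firing rates.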

Passes the transformation through a nonlinearity to get the firing rate.

The aim of an LNP model is to predict the firing rate of a neuron and use it to generate spikes. If we kept \(L(X)\) as it is, however, we would quickly notice that we could obtain negative values for firing rates, which makes no sense! This is what the nonlinearity part of the model handles: passing the linear transformation through an exponential function ensures that the resulting firing rate is always non-negative.

As such, the firing rate in an LNP model is defined as:

\[ \begin{aligned} \lambda = \exp(L(X)) \end{aligned} \]

where \(\lambda\) is a vector containing the firing rates corresponding to each timepoint.

A note on nonlinearity

In NeMoS, the nonlinearity is kept fixed (it is not learned from the data). We default to the exponential, but a small number of other choices, such as soft-plus, are allowed. The allowed choices guarantee both the non-negativity constraint described above and convexity, i.e. a single optimal solution. In principle, one could choose a more complex nonlinearity, but convexity is not guaranteed in general.
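To make the non-negativity point concrete, here is a small NumPy sketch that applies the exponential and a soft-plus function to a few made-up linear-stage outputs. The input values are illustrative, and the soft-plus is written by hand as \(\log(1 + e^{x})\) rather than taken from NeMoS.

```python
import numpy as np

# Illustrative linear-stage outputs; some of them are negative.
linear_output = np.array([-3.0, -1.0, 0.0, 0.5, 2.0])

# Exponential nonlinearity: maps any real number to a positive rate.
rate_exp = np.exp(linear_output)

# Soft-plus, log(1 + exp(x)), computed stably with logaddexp.
rate_softplus = np.logaddexp(0.0, linear_output)

print(rate_exp)
print(rate_softplus)
```

Both functions return strictly positive values for every input. They differ mainly for large inputs, where the exponential grows much faster than the soft-plus.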

What is the difference between a “link function” and the “nonlinearity”?

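The link function states the relationship between the linear predictor and the mean of the distribution function. If \(g\) is a link function, \(L(⋅)\) is the linear predictor and \(\lambda\) the mean of the distribution function:

\[ \begin{aligned} g(\lambda) = L(X) \end{aligned} \]

The “nonlinearity” is the name for the inverse of the link function, \(g^{-1}(⋅)\), so that \(\lambda = g^{-1}(L(X))\). For the exponential nonlinearity used here, the link function is the logarithm.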

Uses the firing rate as the mean of a Poisson distribution to generate spikes

In this type of GLM, each spike train is modeled as a sample from a Poisson distribution whose mean is the firing rate — that is, the output of the linear-nonlinear components of the model.
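The sampling step can be sketched with NumPy's random generator. The rate vector below is a made-up example (expected spikes per time bin), not an output of any fitted model.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible

# Hypothetical firing rate, in expected spikes per bin, for 10 time bins.
rate = np.array([0.2, 0.2, 0.5, 2.0, 4.0, 4.0, 2.0, 0.5, 0.2, 0.2])

# Two spike-count realizations drawn from the same rate.
spikes_a = rng.poisson(rate)
spikes_b = rng.poisson(rate)

# Averaging many realizations recovers the underlying rate.
mean_counts = rng.poisson(rate, size=(5000, rate.size)).mean(axis=0)

print(spikes_a)
print(spikes_b)
print(mean_counts.round(2))
```

Each call draws a different spike train from the same rate, while the average over many draws approaches the rate itself.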

Spiking is a stochastic process. This means that a given firing rate can lead to many different possible spike trains. Since the model could generate an infinite number of spike train realizations, how do we evaluate how well it explains the single observed spike train? We do this by computing the log-likelihood: it quantifies how likely it is to observe the actual spike train given the predicted firing rate. If \( y(t) \) is the observed spike count and \( \lambda(t) \) is the predicted firing rate at time \( t \), then the log-likelihood at time \( t \) is:

\[ \log P(y(t) \mid \lambda(t)) = y(t)\log\lambda(t) - \lambda(t) -\log(y(t)!) \]

However, the term \( -\log(y(t)!) \) does not depend on \( \lambda \), and therefore is constant with respect to the model. As a result, it is usually dropped during optimization, leaving us with the simplified log-likelihood:

\[ \log P(y(t) \mid \lambda(t)) = y(t) \log \lambda(t) - \lambda(t) \]

Summed over time points, this expression forms the objective for LNPs. In practice, we aim to maximize this log-likelihood, which is equivalent to minimizing the negative log-likelihood (the loss function). That is, we look for the parameters \(W\) and \(c\) whose predicted firing rate \(\lambda(t)\) makes the observed spike train as likely as possible under the model.
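The log-likelihood formulas above can be checked numerically. The sketch below uses a hypothetical helper, `poisson_log_likelihood`, and made-up spike counts. It sums the per-bin terms over time and compares a few candidate constant rates.

```python
import numpy as np
from math import lgamma


def poisson_log_likelihood(y, lam, drop_constant=True):
    """Sum over time bins of y*log(lam) - lam, optionally minus log(y!)."""
    y = np.asarray(y, dtype=float)
    lam = np.broadcast_to(np.asarray(lam, dtype=float), y.shape)
    ll = np.sum(y * np.log(lam) - lam)
    if not drop_constant:
        # log(y!) = lgamma(y + 1); this term does not depend on lam.
        ll -= sum(lgamma(v + 1.0) for v in y)
    return float(ll)


y = np.array([0, 1, 3, 2, 0])  # illustrative spike counts, one per time bin

# Score a few candidate constant firing rates (spikes per bin).
candidates = [0.5, 1.2, 2.0]
scores = {lam: poisson_log_likelihood(y, lam) for lam in candidates}
best = max(scores, key=scores.get)

print(scores)
print(best)
```

Among these candidates, the highest score goes to 1.2, which is the mean of the observed counts. Including or dropping the \(\log(y(t)!)\) term shifts every score by the same amount, so it does not change which rate wins.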

Why use GLMs?

Why not just use linear regression? Because neural data breaks its key assumptions. Linear regression expects normally distributed data with constant variance, but spike counts are non-Gaussian. Even more problematic, neural variability isn’t constant: neurons that fire more frequently also tend to be more variable. This violates the homoscedasticity assumption that’s fundamental to linear regression, making GLMs a much more suitable framework for modeling neural activity.
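The link between firing rate and variability can be illustrated with simulated Poisson counts. This is a sketch with made-up rates, not an analysis of the recorded data: for a Poisson distribution the variance equals the mean, so higher rates come with higher variance.

```python
import numpy as np

rng = np.random.default_rng(1)  # fixed seed for reproducibility

# Three hypothetical neurons with low, medium and high mean counts per bin.
rates = np.array([1.0, 5.0, 20.0])

# 20000 simulated bins per neuron.
counts = rng.poisson(rates, size=(20000, rates.size))

sample_mean = counts.mean(axis=0)
sample_var = counts.var(axis=0)

print(sample_mean.round(2))
print(sample_var.round(2))
```

The sample variance tracks the sample mean for each simulated neuron, which is exactly the non-constant variance that ordinary linear regression does not account for.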

GLMs are almost as easy to fit as linear regression! The objective function (negative log-likelihood) of GLMs with canonical link functions (such as the log link we are using here) is convex, which means that any local minimum is also a global minimum, so the optimization cannot get stuck in a suboptimal solution.
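One way to see what convexity buys us is to fit a tiny Poisson GLM from two different starting points and check that both runs end up at the same parameters. The sketch below uses simulated data and plain gradient descent written by hand. The data, learning rate, iteration count, and the helper `fit_poisson_glm` are all illustrative assumptions, and this is not the solver NeMoS uses.

```python
import numpy as np

rng = np.random.default_rng(2)  # fixed seed for reproducibility

# Simulate counts from a known Poisson GLM (all values are illustrative).
n_bins = 2000
x = rng.normal(size=n_bins)
true_w, true_c = 0.5, -1.0
y = rng.poisson(np.exp(true_w * x + true_c))


def fit_poisson_glm(x, y, w0, c0, lr=0.05, n_iter=5000):
    """Minimize the mean negative log-likelihood by plain gradient descent."""
    w, c = w0, c0
    for _ in range(n_iter):
        lam = np.exp(w * x + c)   # predicted rate at the current parameters
        resid = lam - y           # derivative of the loss w.r.t. L(X)
        w -= lr * np.mean(resid * x)
        c -= lr * np.mean(resid)
    return np.array([w, c])


fit_a = fit_poisson_glm(x, y, w0=0.0, c0=0.0)
fit_b = fit_poisson_glm(x, y, w0=-1.0, c0=1.0)

print(fit_a)
print(fit_b)
```

Both initializations converge to the same weight and intercept, and these estimates land close to the values used to simulate the data.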

More resources on GLMs

If you would like to learn more about GLMs, you can refer to:

NeMoS GLM tutorial: for a bit more detailed explanation of all the components of a GLM within the NeMoS framework, as well as some nice visualizations of all the steps of the input transformation!

Introduction to GLM - CCN software workshop by the Flatiron Institute: for a step by step example of using GLMs to fit the activity of a single neuron in VISp under current injection.

Neuromatch Academy GLM tutorial: for a bit more detailed explanation of the components of a GLM, slides and some coding exercises to ensure comprehension.

Jonathan Pillow’s COSYNE tutorial: for a longer tutorial on all of the components of a GLM, as well as different types of GLM besides LNP.

Environment setup and library imports#

# Install requirements for the databook

try:

    from databook_utils.dandi_utils import dandi_download_open

except ImportError:

    # The databook utilities are not installed yet: clone the repository and install it

    !git clone https://github.com/AllenInstitute/openscope_databook.git

    %cd openscope_databook

    %pip install -e .

# Import libraries

import seaborn as sns

from scipy.stats import zscore

import numpy as np

import matplotlib.pyplot as plt

import pynapple as nap

import nemos as nmo


# Imports for ease of visualization

import warnings

import matplotlib as mpl

warnings.filterwarnings("ignore")

from matplotlib.ticker import MaxNLocator

from scipy.stats import gaussian_kde

from matplotlib.patches import Patch

# Parameters for plotting

custom_params = {"axes.spines.right": False, "axes.spines.top": False}

sns.set_theme(style="ticks", palette="colorblind", font_scale=1.5, rc=custom_params)

Download data#

# Dataset information

dandiset_id = "000021"

dandi_filepath = "sub-726298249/sub-726298249_ses-754829445.nwb"

download_loc = "."

# Download the data using NeMoS

io = nmo.fetch.download_dandi_data(dandiset_id, dandi_filepath)

Now that we have downloaded the data, it is very simple to open the dataset with Pynapple:

data = nap.NWBFile(io.read(), lazy_loading=False)

nwb = data.nwb

print(data)

754829445

┍━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┯━━━━━━━━━━━━━┑

│ Keys │ Type │

┝━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┿━━━━━━━━━━━━━┥

│ units │ TsGroup │

│ static_gratings_presentations │ IntervalSet │

│ spontaneous_presentations │ IntervalSet │

│ natural_scenes_presentations │ IntervalSet │

│ natural_movie_three_presentations │ IntervalSet │

│ natural_movie_one_presentations │ IntervalSet │

│ gabors_presentations │ IntervalSet │

│ flashes_presentations │ IntervalSet │

│ drifting_gratings_presentations │ IntervalSet │

│ timestamps │ Tsd │

│ running_wheel_rotation │ Tsd │

│ running_speed_end_times │ Tsd │

│ running_speed │ Tsd │

│ raw_gaze_mapping/screen_coordinates_spherical │ TsdFrame │

│ raw_gaze_mapping/screen_coordinates │ TsdFrame │

│ raw_gaze_mapping/pupil_area │ Tsd │

│ raw_gaze_mapping/eye_area │ Tsd │

│ optogenetic_stimulation │ IntervalSet │

│ optotagging │ Tsd │

│ filtered_gaze_mapping/screen_coordinates_spherical │ TsdFrame │

│ filtered_gaze_mapping/screen_coordinates │ TsdFrame │

│ filtered_gaze_mapping/pupil_area │ Tsd │

│ filtered_gaze_mapping/eye_area │ Tsd │

│ running_wheel_supply_voltage │ Tsd │

│ running_wheel_signal_voltage │ Tsd │

│ raw_running_wheel_rotation │ Tsd │

┕━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┷━━━━━━━━━━━━━┙

Pynapple objects

When printing data, we can see four types of Pynapple objects:

TsGroup: Dictionary-like object to group objects with different timestamps

IntervalSet: A class representing a (irregular) set of time intervals in elapsed time, with relative operations

Tsd: 1-dimensional container for neurophysiological time series; provides standardized time representation, plus various functions for manipulating time series

TsdFrame: Column-based container for neurophysiological time series

To learn more, please refer to the Pynapple documentation.

Extraction, preprocessing and stimuli revision#

Extracting spiking data#

We have a lot of information in data, but we are interested in the units.

(It might take a while the first time that you run this. That’s okay: the dataset is quite big.)

units = data["units"]

# See the columns

print(f"columns : {units.metadata_columns}")

# See the dataset

print(units)

columns : ['rate', 'spread', 'velocity_below', 'silhouette_score', 'firing_rate', 'd_prime', 'nn_hit_rate', 'waveform_duration', 'amplitude', 'cluster_id', 'snr', 'local_index', 'peak_channel_id', 'PT_ratio', 'presence_ratio', 'max_drift', 'cumulative_drift', 'repolarization_slope', 'waveform_halfwidth', 'amplitude_cutoff', 'nn_miss_rate', 'quality', 'velocity_above', 'isolation_distance', 'l_ratio', 'recovery_slope', 'isi_violations']

Index rate spread velocity_below silhouette_score firing_rate d_prime nn_hit_rate waveform_duration amplitude cluster_id snr local_index peak_channel_id PT_ratio presence_ratio max_drift cumulative_drift repolarization_slope waveform_halfwidth amplitude_cutoff nn_miss_rate quality velocity_above isolation_distance l_ratio recovery_slope isi_violations

--------- -------- -------- ---------------- ------------------ ------------- --------- ------------- ------------------- ----------- ------------ ----- ------------- ----------------- ---------- ---------------- ----------- ------------------ ---------------------- -------------------- ------------------ -------------- --------- ---------------- -------------------- --------- ---------------- ----------------

951763702 2.38003 30 nan nan 2.38 4.77 0.98 1.52 78.67 0 2.09 0 850135036 1.38 0.98 23.02 425.42 0.1 0.73 0.5 0 good 0 63.68 0 -0 0.44

951763707 0.01147 80 nan 0.03 0.01 3.48 0 1.44 105.93 1 1.54 1 850135036 3.02 0.5 nan 0 0.05 2.18 0.5 0 noise -0.17 12.27 0.01 -0.39 0

951763711 3.1503 50 nan 0.17 3.15 6.08 1 1.04 189.17 2 1.44 2 850135038 2.35 0.99 14.29 328.21 0.12 0.91 0.03 0 good -0.27 63.87 0 -0.01 0.06

951763715 6.53 40 nan 0.12 6.53 5.04 0.99 1.28 191.85 3 1.43 3 850135038 2.05 0.99 27.45 369.58 0.18 0.63 0.01 0 good 0.62 47.54 0 -0.02 0.64

951763720 2.00296 40 0 0.2 2 6.45 0.99 0.27 316.89 4 4.28 4 850135044 0.63 0.99 39.21 214.71 1.37 0.15 0 0 good 0.34 59.93 0 -0.29 0.55

951763724 8.66233 60 -7.55 0.22 8.66 3.1 0.86 0.37 133.85 5 2.22 5 850135044 4.04 0.99 81.75 1245.94 0.37 0.23 0.2 0.01 noise 1.61 41.19 0.03 -0.04 0.55

951763729 11.134 30 -0.69 0.01 11.13 4.61 0.98 0.32 153.87 6 2.45 6 850135044 3.56 0.85 36.45 484.22 0.62 0.16 0.42 0 noise 0.69 91.43 0 -0.09 0.31

951763733 23.8915 60 -0.27 0.19 23.89 3.2 0.74 0.38 329.91 7 1.36 7 850135050 0.19 0.99 47.53 107.39 1.03 0.25 0 0.05 good 1.03 94.32 0.04 -0.23 0.11

951763737 1.01848 60 -0.27 0.12 1.02 3.61 0.32 0.51 251.6 8 3.22 8 850135050 0.93 0.63 36.8 86.37 0.31 0.29 0 0 noise 2.06 34.53 0.01 -0.14 8.63

951763741 11.8133 30 nan 0.15 11.81 1.99 0.25 1.21 110.32 9 0.43 9 850135050 0.4 0.93 88.68 774.76 0.01 1.29 0.37 0.05 noise 11.33 34.23 0.09 -0.02 0.06

951763745 13.4006 50 -9.47 0.02 13.4 1.8 0.24 1.15 95.07 10 0.33 10 850135050 0.43 0.99 98.87 894.51 0.01 1.33 0.35 0.05 noise 14.42 35.34 0.1 -0.02 0.06

951763749 18.2252 60 -8.45 0.01 18.23 1.85 0.35 1.54 96.28 11 0.32 11 850135050 0.52 0.99 128.7 1059.25 0.22 nan 0.28 0.06 noise 12.02 39.32 0.09 -0.01 0.17

951763753 18.9817 80 9.17 0.1 18.98 2.67 0.89 1.03 71.72 12 0.22 12 850135050 0.46 0.99 53.35 547.75 0.04 nan 0.25 0.01 noise -0.86 92.67 0.01 nan 0.11

951763758 13.2587 20 nan 0.04 13.26 4.44 0.95 1.11 147.66 13 0.68 13 850135050 0.49 0.54 29.68 255.01 0.02 nan 0.09 0 noise -25.41 62.62 0.01 nan 0.27

951763762 17.3762 40 8.93 0.01 17.38 1.74 0.32 2.01 105.62 14 0.33 14 850135050 0.54 0.91 121.76 921.06 0.1 nan 0.09 0.06 noise 11.68 37.33 0.1 -1.31 0.07

951763766 12.0932 60 -0.34 0.03 12.09 3.12 0.57 0.45 259.12 15 0.96 15 850135050 0.06 0.99 49.6 189.29 0.61 0.34 0 0.02 noise 1.37 42.2 0.05 -0.17 0.85

951763770 4.00705 120 -2.35 0.11 4.01 3.16 0.48 0.71 117.65 16 1.09 16 850135064 0.77 0.98 37.16 253.33 0.34 0.08 0.01 0.01 noise 0.1 37.27 0.03 -0.13 0.03

951763774 5.77131 120 -0.41 0.04 5.77 4.46 0.76 0.32 107.42 17 2.54 17 850135052 0.51 0.91 41.06 172.15 0.41 0.18 0.03 0.01 good -0.46 57.82 0.01 -0.09 0.04

951763778 1.15881 120 -0.48 -0.05 1.16 3.27 0.1 0.32 87.23 18 2.02 18 850135052 0.53 0.88 34.17 152.12 0.34 0.18 0.05 0 noise -1.77 26.81 0.03 -0.08 0.29

951763782 14.6012 50 -0.34 0.18 14.6 4.91 0.98 1.3 282.59 19 2.51 19 850135058 4.98 0.99 27.03 92.57 0.19 nan 0 0 good -0.69 63.43 0 -0.01 0

951763786 7.50032 50 -0.34 0.12 7.5 4.91 0.96 1.41 223.21 20 1.89 20 850135058 34.12 0.99 33.58 103.36 0.1 nan 0 0 good 0 58.26 0.01 -0.05 0.02

951763790 0.50676 70 -0.07 nan 0.51 4.22 0.33 0.48 204.91 21 1.88 21 850135058 0.95 0.66 7.77 24.52 0.67 0.18 0.06 0 good 0.27 36.67 0 -0.13 0.6

951763794 13.4125 40 -0.69 0.08 13.41 3.93 0.91 0.23 153.74 22 2.27 22 850135060 0.36 0.99 16.81 234.41 0.65 0.07 0.28 0 noise 0.34 59.03 0.01 -0.07 0.06

951763798 3.45524 90 -0.2 0.08 3.46 3.82 0.65 0.54 145.9 23 2.16 23 850135060 0.79 0.99 22.86 245.82 0.44 0.21 0.5 0 good -0.14 38.5 0.02 -0.05 0.16

951763802 14.2516 60 -0.34 0.17 14.25 3.97 0.77 0.62 229.05 24 3.05 24 850135062 0.43 0.99 41.46 115.68 0.63 0.25 0.06 0.01 good 0.41 55.09 0.02 -0.11 0.17

951763806 2.36639 70 -0.21 0.05 2.37 2.59 0.24 0.54 192.35 25 1.8 25 850135072 0.45 0.99 17.38 311.82 0.54 0.3 0.05 0 good 0 26.58 0.07 -0.09 0.19

951763808 10.4327 60 -0.27 0.02 10.43 2.9 0.53 0.36 300.37 26 1.06 26 850135050 0.08 0.99 49.98 153.06 0.97 0.27 0 0.02 good 1.03 37.43 0.05 -0.21 0.19

951763811 0.91494 50 -0.34 -0.01 0.91 4.15 0.26 0.54 223.67 27 2.99 27 850135062 0.37 0.94 10.11 49.54 0.73 0.22 0.02 0 noise 0.34 33.34 0.01 -0.08 0.74

951763815 8.95715 70 -0.41 -0 8.96 3.03 0.64 0.52 195.89 28 2.64 28 850135062 0.49 0.99 20.6 130.53 0.6 0.22 0.22 0.01 noise 0.48 49.55 0.02 -0.11 0.2

951763819 0.69669 70 -0.21 0.04 0.7 3.2 0.3 0.56 182.87 29 2.46 29 850135062 0.46 0.75 12.96 67.73 0.49 0.25 0.2 0 good 0.41 25.92 0.02 -0.08 0.96

951763823 15.7534 80 0.27 0.08 15.75 3.26 0.95 1.5 166.02 30 1.27 30 850135064 1.21 0.99 31.47 102.05 0.07 0.84 0.16 0 good 0.27 65.17 0.01 -0.02 0.03

951763828 20.426 50 0 0.17 20.43 7.35 1 0.36 387.75 31 4.76 31 850135066 0.9 0.99 47.82 104.69 1.47 0.19 0 0 good 0.34 129.98 0 -0.28 0

951763833 5.54128 50 -0.34 0.14 5.54 2.73 0.83 1.41 253.18 32 1.91 32 850135068 10.55 0.99 44.35 131.02 0.15 nan 0.5 0 good 0 49.27 0.01 -0.03 0.09

951763837 10.3832 40 -0.69 0.07 10.38 3.26 0.92 1.4 332.94 33 2.45 33 850135068 2.74 0.99 43.84 84.73 0.18 nan 0 0 good -0.34 50.35 0.02 -0.02 0.01

951763841 18.6182 70 0 0.09 18.62 2.77 0.91 1.04 253.41 34 1.85 34 850135068 7.92 0.99 47.61 117.71 0.15 nan 0.06 0.01 good -0.07 53.17 0.03 -0 0.03

951763845 12.7977 50 -1.37 0.07 12.8 4.31 0.96 1.26 207.72 35 1.64 35 850135068 11.52 0.99 37.5 94.2 0.13 nan 0.02 0.01 good 0 69.14 0 -0.01 0.03

951763849 11.4132 80 0.27 0.21 11.41 5.34 0.94 0.51 183.93 36 2.58 36 850135070 0.33 0.99 34 110.88 0.54 0.25 0 0 good 0.76 71.26 0 -0.09 0.04

951763854 2.52108 90 0.98 0.11 2.52 3.89 0.83 0.47 101.37 37 1.4 37 850135070 0.22 0.99 43.12 379.27 0.27 0.25 0.44 0 noise 1.17 48.78 0.01 -0.04 0.02

951763859 17.1477 60 -0.34 0.11 17.15 3.16 0.62 0.52 317.22 38 3.53 38 850135072 0.39 0.99 36.53 98.08 0.97 0.22 0.01 0.02 good 0.27 48.73 0.04 -0.18 0.06

951763863 0.59377 70 -0.48 -0.01 0.59 1.96 0.07 0.55 231.81 40 2.5 39 850135072 0.43 0.98 38.38 297.14 0.69 0.23 0.06 0 good 0.21 25.39 0.04 -0.12 0.22

951763867 0.03658 70 -0.21 nan 0.04 2.72 0 0.54 281.53 41 3.04 40 850135072 0.48 0.71 8.83 4.12 0.86 0.25 0.49 0 good 0.21 24.11 0.02 -0.19 0

951763874 0.01312 80 0 0.02 0.01 3.51 nan 0.52 227.62 42 2.19 41 850135072 0.44 0.49 nan 0 0.64 0.25 0.41 0 noise 0.49 27.82 0 -0.12 0

951763880 0.02273 170 -2.96 nan 0.02 5.65 0.17 0.33 14.44 43 0.17 42 850135478 2.71 0.47 12.28 37.9 0.05 0.73 0.4 0 noise 0.05 66.55 0 -0.02 0

951763887 5.85025 60 -0.69 0.12 5.85 3.98 0.97 1.46 175.92 44 2.17 43 850135076 1.2 0.99 13.59 157.41 0.08 0.76 0.5 0 good -0.14 70.07 0 -0.06 0.13

951763893 5.0215 100 -0.2 0.07 5.02 3.25 0.73 1.51 127.55 45 1.68 44 850135078 1.25 0.99 31.06 277.75 0.05 0.77 0.5 0 good 0.62 45.59 0.01 -0.03 0.11

951763901 6.27197 90 0.21 0.16 6.27 3.44 0.9 1.5 162.24 46 1.93 45 850135086 2.53 0.99 24.48 177.91 0.07 nan 0.04 0 good 2.34 60.28 0.01 nan 0.05

951763907 9.34383 80 0 0.11 9.34 3.11 0.97 0.54 211.34 47 3.05 46 850135082 0.61 0.99 27.14 116.85 0.62 0.23 0.04 0 good 0.48 67.6 0 -0.12 0.06

951763914 15.789 70 -0.21 0.14 15.79 6.13 0.99 0.55 244.26 48 1.99 47 850135084 0.6 0.99 32.78 87.27 0.66 0.19 0 0 good 0 113.55 0 -0.12 0

951763920 4.00488 110 0.04 0.1 4 2.75 0.77 0.59 158.2 49 1.42 48 850135084 0.56 0.99 16.87 275.2 0.36 0.33 0.5 0 good 0.16 50.12 0.01 -0.07 0.21

951763928 5.38287 60 0 0.36 5.38 5.01 0.96 0.51 684.2 50 4.44 49 850135084 0.81 0.99 49.61 98.27 1.97 0.26 0 0 good 0.41 61.92 0 -0.44 0

951763934 3.04221 60 0.34 0.15 3.04 2.97 0.88 0.96 223.44 51 1.14 50 850135084 0.34 0.99 43.2 212.11 0.58 0.3 0.5 0 good 0.21 46.77 0.01 -0.03 0.26

951763941 0.00816 50 -0.34 nan 0.01 4.4 nan 0.48 691.84 52 2.74 51 850135084 0.99 0.43 nan 0 1.83 0.34 0.5 0 noise 0.69 78.63 0 -0.7 0

951763947 1.57897 50 0 0.17 1.58 3.04 0.99 2.05 869.17 53 5.31 52 850135084 1.05 0.98 64.34 801.87 0.98 0.43 0.05 0 noise 0.34 67.51 0 nan 0.12

951763954 11.8275 60 -0.14 0.2 11.83 5.34 0.98 0.52 407.73 54 4.67 53 850135086 0.94 0.99 35.17 67.31 1.21 0.18 0 0 good 0.34 70.21 0 -0.17 0

951763961 8.62689 60 -0.14 0.06 8.63 2.82 0.95 1.5 234.27 55 2.82 54 850135086 1.65 0.99 28.47 109.43 0.12 1.47 0 0 good 0.69 70.55 0 -0.16 0.08

951763968 8.3856 70 0.76 0.19 8.39 4.54 0.99 1.29 245.66 56 3.3 55 850135088 2.21 0.99 34.87 106.33 0.13 0.96 0 0 good 0.69 79.37 0 -0.01 0.01

951763975 14.9898 70 0.48 0.13 14.99 5.46 0.98 1.09 232.1 57 2.92 56 850135090 1.02 0.99 35.15 74.72 0.13 0.67 0 0 good 0.62 78.92 0.01 0 0

951763982 12.1488 60 -0.69 0.18 12.15 3.6 0.79 0.56 145.48 59 2.5 57 850135092 0.43 0.99 19.53 80.96 0.39 0.32 0.13 0.02 good -1.03 80.68 0.01 -0.08 0.08

951763988 7.26286 60 -0.69 0.27 7.26 5.52 0.99 0.54 294.45 60 4.54 58 850135092 0.38 0.99 25.28 54.14 0.92 0.21 0 0 good 0.41 77.64 0 -0.16 0

951763994 5.46647 70 0.55 0.02 5.47 3 0.54 0.54 135.68 61 2.35 59 850135092 0.38 0.99 34.14 247.78 0.35 0.32 0.33 0.01 noise 1.03 44.64 0.02 -0.08 0.09

951764001 11.7287 80 0.14 0.17 11.73 4.51 0.97 1.11 166.75 62 2.57 60 850135094 1.25 0.99 17.56 115.6 0.09 0.77 0.23 0 good 0.62 84.56 0 0 0.11

951764008 0.15738 110 0.41 0.07 0.16 2.73 0 0.59 179.69 63 2.35 61 850135094 0.89 0.93 29.74 189.08 0.44 0.27 0.1 0 good 0.39 18.86 0.04 -0.09 0

951764014 2.82003 140 0.03 -0.01 2.82 2.77 0.25 0.98 42.76 64 0.38 62 850135122 3.25 0.99 81.96 945.36 0.03 nan 0.5 0.01 noise -0.01 30.14 0.03 nan 0.09

951764022 11.1967 70 -0.34 0.15 11.2 3.83 0.93 1.21 149.13 65 1.36 63 850135096 7.52 0.99 29.51 100.64 0.06 nan 0.08 0.01 good 0.34 67 0 -0.01 0.04

951764029 5.63883 80 -0.07 0.06 5.64 3.02 0.74 1.39 135.8 66 1.78 64 850135090 1.5 0.99 28.49 177 0.08 0.85 0.12 0 good 0.34 48.58 0.01 -0.01 0.09

951764035 11.5555 50 -0.34 0.15 11.56 3.75 0.94 0.59 147.52 67 1.94 65 850135100 0.95 0.99 33.98 112.86 0.36 0.27 0.12 0 good 1.37 64 0.01 -0.07 0.09

951764042 12.2763 50 -0.76 0.27 12.28 4.78 1 0.54 336.72 68 4.26 66 850135100 0.75 0.99 26.05 81.89 0.96 0.32 0 0 good 0 95.55 0 -0.18 0

951764049 3.296 40 -1.03 0.13 3.3 2.55 0.72 1.37 150.65 69 1.85 67 850135100 1.04 0.99 33.97 270.9 0.07 0.77 0.06 0 good 0 41.87 0.02 -0.03 0.23

951764055 7.28983 70 -0.27 0.22 7.29 4.45 0.87 1.22 250.02 70 2.46 68 850135102 1.58 0.99 27.69 85.71 0.14 0.84 0 0 good -0.69 62.86 0 -0.01 0.01

951764063 7.33901 50 -0.69 0.24 7.34 5.65 0.97 1.18 273.47 71 2.63 69 850135102 1.4 0.99 21.86 74.28 0.17 0.82 0 0 good 1.03 64.91 0 -0 0

951764070 10.6127 80 -1.44 0.13 10.61 3.09 0.97 1.39 157.74 72 1.59 70 850135102 2.91 0.99 25.1 122.97 0.08 1.07 0.16 0 good 0.21 76.71 0 -0.01 0.03

951764076 3.39117 80 -0.1 0.05 3.39 2.28 0.48 1.48 193.47 73 1.68 71 850135102 2.61 0.99 26.58 231.56 0.09 1.04 0.5 0.01 good -0.34 35.86 0.03 -0.1 0.14

951764083 10.1095 70 -0.34 0.22 10.11 5.39 0.98 1.57 221.12 74 2.27 72 850135102 1.94 0.99 30.58 103.97 0.13 1.41 0 0 good 0.62 76.5 0 -0.14 0

951764090 14.326 30 -0.69 0.14 14.33 6.02 1 0.55 362.63 75 4.38 73 850135104 0.63 0.99 29.14 58.24 0.99 0.27 0 0 good nan 90.99 0 -0.2 0

951764099 11.0116 60 -0.14 0.07 11.01 3.51 0.96 0.51 167.25 76 2.15 74 850135108 0.81 0.99 29.02 109.5 0.5 0.23 0.1 0 good 0.69 66.59 0 -0.1 0.02

951764106 12.9879 80 -0.69 0.11 12.99 2.44 0.95 0.58 225.94 77 2.86 75 850135108 0.58 0.99 24.5 86.23 0.54 0.29 0.04 0 good 0.27 64.34 0 -0.08 0.03

951764112 8.86983 50 -2.06 0.1 8.87 4.32 0.95 0.51 256.97 78 3.1 76 850135108 0.57 0.99 16.09 74.1 0.79 0.19 0 0 good 0.62 65.45 0 -0.14 0.04

951764118 4.8696 50 -0.69 0.14 4.87 2.12 0.89 0.47 297.7 79 3.39 77 850135112 0.73 0.97 32.92 408.72 1.02 0.16 0 0 good 0.69 43.51 0.02 -0.13 0.11

951764125 4.77381 70 -0.21 0.12 4.77 3.1 0.54 0.54 150.03 80 2.52 78 850135110 0.7 0.99 15.09 191.94 0.43 0.26 0.5 0.01 good -0.21 42.81 0.02 -0.08 0.25

951764132 11.0504 70 -0.76 0.1 11.05 3.17 0.92 0.52 157.43 81 2.73 79 850135110 0.7 0.99 32.49 98.35 0.46 0.21 0.01 0 good 0.21 58.85 0.01 -0.07 0.02

951764139 6.14549 80 0 0 6.15 3.69 0.74 0.51 171.94 82 2.81 80 850135110 0.69 0.99 15.5 169.7 0.5 0.25 0.12 0.01 good -0.48 56.27 0.01 -0.1 0.12

951764147 7.73138 70 -0.48 0.08 7.73 3.6 0.89 1.46 154.24 83 1.99 81 850135112 1.35 0.99 19.71 109.97 0.11 0.69 0.1 0 good 0.07 59.23 0.01 -0.01 0.07

951764155 7.7847 60 -1.03 0.18 7.78 6.5 0.99 0.56 392.87 84 4.66 82 850135112 0.66 0.99 30.35 68.68 1.19 0.23 0 0 good 0.48 80.58 0 -0.2 0

951764162 3.00635 90 -0.07 0.1 3.01 2.71 0.64 0.66 136.1 86 2.06 83 850135114 0.29 0.99 16.32 212.82 0.36 0.3 0.5 0 good 0.14 36.71 0.02 -0.06 0.19

951764169 0.58705 80 -0.69 0.13 0.59 3.69 0.8 1.44 141.25 87 1.77 84 850135112 1.27 0.99 25.87 412.24 0.07 0.8 0.26 0 good -0.62 45.93 0 -0.08 0.22

951764175 11.2825 50 0 0.31 11.28 9.6 1 0.51 477.19 88 5.8 85 850135120 0.59 0.99 29.87 110.84 1.62 0.19 0 0 good 0.34 160.48 0 -0.25 0

951764182 3.69808 70 -1.03 0.07 3.7 3.87 0.9 0.55 121.15 89 1.84 86 850135116 0.51 0.99 29.3 193.6 0.35 0.19 0.5 0 good 0.14 60.78 0 -0.04 0.39

951764190 8.31926 60 0 0.05 8.32 5.17 0.98 0.63 165.47 90 2.33 87 850135116 0.66 0.99 27.66 87.8 0.36 0.26 0 0 good 1.37 76.42 0 -0.08 0.01

951764196 2.44906 80 -0.55 0.1 2.45 2.61 0.94 0.54 173.42 91 1.83 88 850135120 0.69 0.99 19.9 308.01 0.56 0.16 0.45 0 good 0.89 63.26 0 -0.07 0.3

951764203 14.0843 50 -0.34 0.39 14.08 10.14 1 0.54 597.12 92 5.17 89 850135122 0.74 0.99 30.94 73.26 1.8 0.21 0 0 good 0 179.49 0 -0.31 0

951764210 5.32293 120 -1.23 0.08 5.32 2.41 0.52 1.3 84.48 94 0.88 90 850135124 1.36 0.99 43.03 341.19 0.04 1.21 0.48 0.02 good 3.35 45.81 0.03 nan 0.21

951764216 19.1162 70 -0.27 0.14 19.12 4.23 0.99 0.49 215.29 95 2.71 91 850135124 0.64 0.99 24.36 85.83 0.69 0.26 0 0.01 good 0.76 105 0 -0.12 0.01

951764224 3.83655 80 -0.69 0.08 3.84 2.57 0.57 0.66 116.01 96 1.18 92 850135124 0.89 0.99 30.09 354.25 0.24 0.29 0.48 0.01 good 0.37 39.91 0.04 -0.08 0.31

951764232 5.33502 80 -1.03 0.17 5.34 5.12 0.94 1.22 189.07 97 1.89 93 850135118 2.04 0.99 29.81 222.13 0.09 1.06 0.04 0 good -0.24 69.38 0 -0.01 0.31

951764239 3.92841 60 -0.34 0.14 3.93 3.6 0.94 0.45 164.54 98 1.46 94 850135126 0.01 0.98 9.06 146.4 0.55 0.26 0.13 0 good 0.55 74.03 0 -0.07 0.12

951764246 5.55037 60 -0.69 0.32 5.55 8.69 1 0.45 443.82 99 4.3 95 850135126 0.31 0.99 21.27 56.42 1.33 0.23 0 0 good 0.41 129.84 0 -0.24 0

951764250 6.62693 50 -0.69 0.31 6.63 6.57 0.99 0.49 418.96 100 4.28 96 850135128 0.71 0.99 27.29 75 1.4 0.15 0 0 good 0.34 85.05 0 -0.19 0

951764254 0.49198 140 -0.26 0.17 0.49 2.12 0.19 0.62 111.44 102 1.18 97 850135130 0.06 0.8 20.49 154.47 0.29 0.41 0.5 0 noise -0.32 28.39 0.04 -0.03 1.76

951764259 7.92751 60 0.27 0.16 7.93 5 0.96 0.52 164.39 103 2.65 98 850135132 0.3 0.99 15.32 97.82 0.51 0.25 0.03 0 good -0.34 73.17 0 -0.07 0.26

951764263 5.8641 170 -1.13 0.02 5.86 2.31 0.58 1.32 45.24 104 0.34 99 850135128 1.58 0.86 39.06 291.98 0.02 nan 0.38 0.01 good 0.47 47.93 0.02 nan 1.1

951764268 4.06389 60 -0.62 0.32 4.06 4.83 0.99 0.47 338.2 105 3.64 100 850135134 0.95 0.99 31.05 89.91 1.07 0.16 0 0 good 0 87.29 0 -0.17 0

951764272 14.842 70 -0.69 0.08 14.84 3.9 0.96 1.57 239.46 106 2.53 101 850135134 1.63 0.99 50.71 584.15 0.14 0.82 0 0 good -0.55 63.47 0.02 nan 0.03

951764276 1.14734 100 -0.2 0.02 1.15 2.61 0.65 1.35 154.86 107 0.94 102 850135134 3.86 0.98 45.41 135.73 0.04 nan 0.49 0 noise -0.07 42.43 0.01 -0.02 0.82

951764279 10.7474 60 0.34 0.13 10.75 4.73 0.97 0.55 227.53 108 3.17 103 850135138 0.68 0.99 24.86 77.78 0.67 0.21 0.01 0 good 0.41 73.46 0 -0.11 0.02

951764284 4.16102 90 -0.69 0.13 4.16 2.12 0.55 0.55 144.3 109 1.75 104 850135138 0.86 0.99 50.53 235.54 0.36 0.29 0.5 0.01 good -0.2 36.42 0.05 -0.07 0.39

951764287 4.80088 70 -0.62 0.14 4.8 3.13 0.68 1.09 150.94 110 1.3 105 850135140 23.67 0.99 24 151.53 0.11 nan 0.21 0 good 0 42.61 0.02 -0.01 0.23

951764293 16.162 80 -1.03 0.07 16.16 5.22 0.98 1.35 208.23 111 1.89 106 850135140 2.98 0.99 24.74 76.61 0.11 nan 0 0 good -0.27 85.28 0 -0 0.03

951764299 9.321 80 0.21 0.07 9.32 3.38 0.95 1.5 150.14 112 1.39 107 850135140 6.74 0.99 16.04 114.15 0.08 nan 0.31 0.01 good 0.62 69.55 0 -0.09 0.07

951764305 12.0763 60 -1.44 0.13 12.08 3 0.96 1.46 152.91 113 1.67 108 850135142 1.84 0.99 32.6 102.77 0.09 1.13 0.03 0 good 1.03 72.79 0 nan 0.07

951764312 13.7481 50 -0.69 0.13 13.75 3.36 0.84 1.47 233.83 115 2.63 109 850135142 1.02 0.99 34.64 85.71 0.09 0.78 0 0.01 good 0.21 50.64 0.03 -0.01 0.02

951764319 2.25282 90 -1.1 0.05 2.25 2.45 0.35 1.51 267.34 116 2.65 110 850135142 1.07 0.99 17.01 223.55 0.12 0.76 0.03 0 good -0.29 26.04 0.12 nan 0.33

951764325 14.3434 60 -0.41 0.13 14.34 4.66 0.97 1.33 232.55 117 1.96 111 850135146 3.02 0.99 28.98 84.5 0.15 1.25 0.01 0 good 0.34 71.41 0 -0.01 0.03

951764332 6.43751 70 -0.96 0.26 6.44 4.59 0.97 1.4 313.16 118 3.19 112 850135148 1.68 0.99 38.3 84.53 0.16 1.22 0 0 good 0 57.14 0 0 0.02

951764338 6.4588 80 -0.89 0.09 6.46 3.03 0.82 1.22 137.86 119 1.52 113 850135148 9.42 0.99 32.3 160.44 0.06 nan 0.5 0.01 good -0.41 54.14 0.01 -0.01 0.35

951764344 12.0088 70 -0.41 0.14 12.01 3.45 0.53 1.26 170.19 120 2.03 114 850135150 8.49 0.99 17.23 94.34 0.09 nan 0.09 0.03 good 0.14 47.58 0.03 -0 0.16

951764350 10.6634 80 -1.25 0.06 10.66 2.7 0.6 1.54 102.21 121 1.18 115 850135150 5.14 0.99 28.25 208.47 0.08 nan 0.3 0.02 good 0 42.69 0.03 nan 0.13

951764357 11.6866 70 -0.21 0.04 11.69 2.57 0.47 1.14 149.83 122 1.75 116 850135150 9.84 0.99 29.84 124.9 0.09 nan 0.29 0.02 good 0.34 40.96 0.04 -0 0.32

951764364 6.12068 80 -0.48 0.04 6.12 2.44 0.48 1.41 185.55 123 2.4 117 850135150 5.61 0.99 11.09 141.89 0.1 nan 0.21 0.01 good 0 33.84 0.05 -0.02 0.41

951764370 14.4044 60 -0.62 0.15 14.4 5.44 0.98 0.48 256.02 124 3.28 118 850135152 0.43 0.99 26.14 74.55 0.83 0.22 0 0 good 0 75.75 0 -0.13 0.02

951764374 4.53159 70 -0.41 0.05 4.53 2.88 0.74 0.58 129.24 125 1.54 119 850135152 0.42 0.99 23.47 155.69 0.38 0.22 0.5 0.01 good 0.34 48.01 0.01 -0.05 0.26

951764379 4.49532 50 -1.72 0.18 4.5 3.39 0.35 0.62 192.49 126 2.63 120 850135156 0.69 0.99 30.38 187.65 0.45 0.25 0.06 0.01 good 0.69 35.32 0.03 -0.09 0.11

951764385 8.29497 50 -2.06 0.02 8.29 4.39 0.64 0.67 183.15 127 2.55 121 850135156 0.68 0.99 26.3 113.55 0.43 0.23 0.01 0.02 good 0.69 56.15 0.01 -0.1 0.06

951764390 3.70893 90 -1.14 0.06 3.71 4.08 0.27 0.65 216.27 128 2.86 122 850135156 0.68 0.99 23.48 132.5 0.44 0.29 0.02 0 good 0.41 32.07 0.03 -0.06 0.12

951764396 5.75643 70 -0.34 0.08 5.76 3.36 0.82 0.58 161.44 129 2.42 123 850135156 0.74 0.99 22.4 168.78 0.4 0.27 0.5 0 good 0.34 57.67 0.01 -0.08 0.06

951764403 1.74472 170 0.78 0.04 1.74 3.14 0.75 1.11 39.29 130 0.56 124 850135156 1.86 0.73 43.2 300.61 0 nan 0.5 0 noise 1.16 47.05 0 -0 0.19

951764409 2.02507 70 -0.69 0.06 2.03 2.57 0.3 0.55 186.02 131 2.53 125 850135156 0.52 0.99 14.65 222.21 0.57 0.18 0.46 0 noise 0 33.19 0.02 -0.07 0.26

951764415 22.8442 60 -0.34 0.22 22.84 5.03 0.98 1.44 394.15 132 3.57 126 850135158 1.3 0.99 24.85 55.77 0.23 0.77 0 0 good 0.14 73.29 0.01 -0.02 0.01

951764421 11.9241 80 0 0.11 11.92 2.85 0.95 1.35 190.96 133 2.13 127 850135158 5.75 0.99 22.18 107.95 0.1 nan 0.1 0 good 0.48 67.95 0 -0 0.04

951764426 2.71742 60 0 0.04 2.72 2.13 0.34 1.21 216.72 134 1.98 128 850135158 3 0.95 18.87 112.71 0.13 1.59 0.23 0.01 noise 0.41 33.84 0.02 -0.01 0.25

951764432 14.0462 60 0 0.04 14.05 2.78 0.81 1.3 257.67 135 2.43 129 850135158 2.32 0.99 15.88 84.43 0.17 1.37 0.05 0.01 good 0.21 61.72 0.01 -0.02 0.17

951764438 22.7161 50 0 0.14 22.72 4.93 0.96 0.38 269.76 136 3.31 130 850135160 0.47 0.99 23.52 68.4 1 0.16 0 0 good 0 89.63 0 -0.18 0.02

951764443 14.2488 60 -0.14 0.09 14.25 3.93 0.95 1.61 201.23 137 2.01 131 850135162 1.09 0.99 21.46 123.04 0.12 0.84 0.01 0 good 0.34 65 0.01 nan 0.06

951764447 3.7575 40 -0.34 0.34 3.76 5.66 0.98 1.19 411.01 139 4.19 132 850135164 2.63 0.99 20.21 58.61 0.26 0.78 0 0 good 0 79.97 0 -0 0

951764454 8.93462 70 -0.34 0.17 8.93 2.77 0.87 1.52 182.8 140 1.78 133 850135164 30.45 0.99 29.2 157.45 0.11 nan 0.11 0 good 0.34 50.85 0.01 -0.04 0.11

951764460 20.9761 50 0 0.18 20.98 4.19 0.94 0.48 301.04 141 3.29 134 850135166 0.31 0.99 30.39 71.88 0.96 0.25 0 0.01 good 0 77.75 0.01 -0.17 0.02

951764466 3.91136 70 0 0.06 3.91 2.22 0.4 0.49 210.82 143 2.19 135 850135166 0.24 0.99 14.37 187.8 0.68 0.27 0.5 0 good 0 34.29 0.03 -0.11 0.3

951764472 15.2495 60 0 0.11 15.25 3.7 0.95 0.47 224.32 144 2.61 136 850135168 0.72 0.99 24.11 91.85 0.72 0.21 0.02 0 good 0.34 90.81 0 -0.11 0.01

951764478 17.1805 50 -0.34 0.21 17.18 5.34 0.98 0.51 364.67 146 3.48 137 850135170 0.4 0.99 24.11 52.17 1.1 0.25 0 0 good 0.34 96.03 0 -0.18 0

951764484 5.14984 60 -0.76 0.12 5.15 3.24 0.85 0.56 164.88 147 1.8 138 850135172 0.28 0.99 35.82 201.39 0.37 0.32 0.5 0 good 0.69 46.7 0.01 -0.08 0.07

951764490 3.234 50 -0.69 0.22 3.23 4.18 0.86 0.45 388.57 148 3.8 139 850135172 0.46 0.99 25.66 90.47 1.3 0.22 0 0 good 0.69 39.48 0.01 -0.3 0.14

951764496 10.2851 60 0.62 0.06 10.29 3.91 0.88 0.49 183.02 149 2.15 140 850135172 0.28 0.99 35.99 104.34 0.54 0.32 0 0.01 good 1.03 63.62 0.01 -0.13 0.04

951764503 20.7697 60 -0.34 0.18 20.77 4.33 0.98 0.45 319.22 150 2.99 141 850135170 0.45 0.99 31.78 87.22 1.02 0.25 0 0 good 0.27 75.24 0 -0.17 0.02

951764508 3.69643 60 0 0.07 3.7 3.15 0.9 1.61 236.92 151 1.62 142 850135174 16.57 0.99 5.65 179.31 0.14 nan 0.5 0 good 0.69 49.97 0 nan 0.39

951764514 12.4374 60 0 0.18 12.44 4.06 0.95 0.54 288.36 152 2.58 143 850135170 0.37 0.99 28.02 112.88 0.78 0.26 0 0 good 0.55 63.79 0 -0.12 0.04

951764520 10.2671 60 0.34 0.13 10.27 3.35 0.95 0.87 173.07 153 2.12 144 850135176 1.35 0.99 13.97 124.88 0.12 0.67 0.11 0 good 1.37 70.56 0 -0 0.08

951764525 5.52423 50 -2.06 0.09 5.52 3.89 0.94 1.03 141.4 154 0.96 145 850135174 1.98 0.99 14.46 162.9 0.1 nan 0.12 0 good 2.06 72.36 0 -0.02 0.03

951764531 12.6207 20 0 0.38 12.62 7.11 0.99 0.54 605.84 155 5.77 146 850135180 0.52 0.99 10.36 28.82 1.88 0.21 0 0 noise nan 75.96 0 -0.34 0

951764537 11.6708 110 0.59 0.09 11.67 nan nan 0.47 59.14 156 0.46 147 850135180 0.47 0.99 53.49 326.22 0.13 0.26 0.24 0 noise 1.02 nan nan -0.04 0.25

951764543 3.67886 50 0.21 0.12 3.68 2.35 0.46 0.48 234.65 157 2.38 148 850135180 0.63 0.99 24.76 279.28 0.7 0.29 0.5 0.01 good 0.69 37.28 0.02 -0.13 0.24

951764550 4.92685 40 0.14 0.03 4.93 2.52 0.56 0.47 224.94 158 2.28 149 850135180 0.58 0.99 33.29 176.93 0.67 0.27 0.5 0.01 good nan 47.92 0.01 -0.14 0.16

951764557 9.14284 70 -6.59 0.07 9.14 2.31 0.79 0.52 77.68 159 0.86 150 850135180 0.5 0.99 18.49 229.16 0.23 0.29 0.28 0.01 good -0.69 66.24 0.01 -0.06 0.29

951764563 10.5269 30 0.34 0.29 10.53 5.38 0.88 0.41 484.4 160 3.13 151 850135182 0.99 0.99 12.41 42.55 1.66 0.15 0 0 good nan 54.51 0.01 -0.34 0.01

951764570 11.5479 60 -0.21 0.1 11.55 4.41 0.98 1.52 239.75 161 1.5 152 850135182 1.97 0.99 35.03 106.12 0.14 0.89 0.01 0 good 0.34 71.52 0 -0.09 0.03

951764576 6.09557 160 2.4 0.07 6.1 nan nan 1.11 39.32 162 0.1 153 850135182 1.28 0.99 43.9 810.05 0.03 nan 0.49 0 noise 1.12 nan nan -0.01 0.24

951764582 3.42961 170 0.47 0.02 3.43 nan nan 0.88 39.19 163 0.25 154 850135182 2.26 0.99 84.01 917.58 0.06 1.46 0.5 0 noise -0.4 nan nan 0 0.08

951764589 5.32965 160 0.79 0.02 5.33 nan nan 1.18 48.64 164 0.16 155 850135182 2.38 0.99 48.75 1006.26 0.01 1.4 0.41 0 noise 0.84 nan nan -0.02 0.09

951764595 6.93115 160 -0.39 0.01 6.93 3.32 0.88 1.09 49.7 165 0.24 156 850135182 10.4 0.97 72.91 674.81 0 1.65 0.35 0.01 noise 0.67 70 0 nan 0.13

951764601 3.70697 130 1.27 -0 3.71 nan nan 1.25 55.59 166 0.29 157 850135182 7.79 0.98 82.49 1050.73 0.05 1.19 0.5 0 noise -2.79 nan nan -0.03 0.1

951764608 18.6999 60 0.27 0.15 18.7 3.01 0.93 1.04 230.74 167 1.47 158 850135182 1.83 0.99 26.88 91.9 0.13 1.22 0.01 0.01 good 0 66.6 0.01 -0.01 0.03

951764614 15.2261 50 -1.37 0.09 15.23 4.43 0.96 0.48 197.19 168 2.92 159 850135186 0.87 0.99 14.87 82.23 0.61 0.22 0.01 0 good 0 64.97 0.01 -0.14 0.02

951764618 1.6015 70 0 0.14 1.6 2.98 0.44 0.52 207.03 169 2.77 160 850135186 0.84 0.99 16.35 273 0.61 0.22 0.04 0 good 0.21 26.8 0.06 -0.11 0.27

951764622 13.3591 40 -0.69 0.07 13.36 3.36 0.88 1.51 241.36 170 1.53 161 850135182 2 0.99 18.59 94.42 0.18 0.73 0.01 0.01 good 0.34 52.06 0.02 -0.06 0.05

951764624 13.0301 50 -0.34 0.15 13.03 2.96 0.93 0.44 173.5 171 2.62 162 850135188 0.28 0.99 17.01 101.88 0.59 0.29 0.5 0 good 0 71.24 0.01 -0.12 0.25

951764626 11.5823 20 nan 0.07 11.58 4.43 0.95 0.44 240.42 172 3.42 163 850135188 0.36 0.99 27.46 72.69 0.81 0.25 0 0 good 1.37 59.18 0.01 -0.16 0.01

951764630 7.20716 40 0 0.12 7.21 4.51 0.93 0.45 197.09 173 2.55 164 850135190 0 0.99 23.69 166.24 0.67 0.29 0.2 0 good 0.34 54.75 0.01 -0.12 0.23

951764635 5.02522 50 0.34 0.27 5.03 7.53 1 0.49 347.15 174 5.55 165 850135192 0.42 0.99 25.15 55.36 1.11 0.19 0 0 good 0.69 92.62 0 -0.19 0

951764639 10.251 60 -7.07 0.09 10.25 2.48 0.49 0.48 112.36 175 1.44 166 850135196 0.42 0.99 67.16 281.5 0.33 0.3 0.14 0.02 good 0 39.14 0.05 -0.07 0.08

951764644 13.5192 50 0.14 0.01 13.52 3.09 0.67 0.48 172.02 176 2.4 167 850135196 0.47 0.99 31.34 112.85 0.5 0.29 0.15 0.03 good -0.69 57.46 0.02 -0.1 0.03

951764648 8.21975 40 0 0.15 8.22 5.73 0.98 0.4 329.23 177 4.27 168 850135196 0.48 0.99 19.13 71.28 1.19 0.18 0 0 good -0.69 69.88 0 -0.23 0.01

951764652 6.23229 60 -1.99 0.08 6.23 4.13 0.85 0.47 163.54 178 2.11 169 850135196 0.41 0.99 15.8 227.39 0.54 0.23 0.5 0.01 good 0 60.61 0.01 -0.11 0.12

951764656 5.28139 50 -1.17 0.04 5.28 2.15 0.34 0.54 85.26 179 1 170 850135196 0.49 0.99 24.37 331.74 0.21 0.38 0.5 0.01 noise 0.69 34.98 0.05 -0.06 0.11

951764663 0.00093 160 2.36 nan 0 3.85 nan 0.22 221.89 180 2.75 171 850135196 1.11 0.09 nan 0 0.96 0.14 0.36 0 noise 2.66 4.48336e+12 nan -0.29 0

951764669 3.9993 70 -0.14 0.19 4 4.38 0.52 1.68 170.8 181 1.45 172 850135198 3.39 0.99 14.31 147.96 0.1 nan 0.13 0.01 good 0.21 45.22 0.02 nan 1.4

951764675 4.10233 70 -0.21 0.02 4.1 4.21 0.57 0.95 156.97 182 1.16 173 850135198 3.37 0.99 15.12 155.57 0.12 nan 0.2 0.01 good 0.21 48.58 0.01 -0.01 1.6

951764681 1.56647 70 -0.27 0.18 1.57 2.84 0.8 1.29 167.88 183 2.09 174 850135200 1.27 0.99 35.17 286.59 0.11 0.66 0.14 0 good 0.69 43.89 0.01 -0.01 0.32

951764688 1.50726 70 -0.34 0.13 1.51 4.1 0.72 1.32 185.03 184 1.67 175 850135198 3.31 0.99 16.1 176.19 0.12 nan 0.02 0 good 0.14 35.9 0.03 -0.01 1.14

951764694 2.66358 90 -0.07 0.05 2.66 2.63 0.75 0.44 110.23 185 2.32 176 850135202 0.44 0.99 19.57 265.47 0.39 0.22 0.5 0 good -0.14 41.73 0.02 -0.07 0.57

951764700 4.42691 70 -1.99 0.14 4.43 6.33 0.99 0.49 102.15 186 1.76 177 850135204 0.13 0.99 19.77 168.42 0.29 0.37 0.35 0 good -0.55 80.47 0 -0.05 0.12

951764706 10.0951 70 -0.21 0.09 10.1 4.84 0.95 0.43 159.53 187 3.04 178 850135206 0.26 0.99 10.66 84.31 0.6 0.18 0.01 0.01 good 0.21 60.83 0.01 -0.09 0.08

951764714 3.74241 80 0.06 0.07 3.74 4.04 0.86 0.49 115.39 188 2.22 179 850135206 0.33 0.99 12.39 269.85 0.38 0.26 0.5 0 good 0 54.61 0 -0.07 0.22

951764718 9.8384 60 -0.21 0.14 9.84 3.6 0.74 0.44 154.54 189 2.32 180 850135210 0.75 0.99 18.21 178.03 0.55 0.15 0.17 0.01 noise 0 55.11 0.02 -0.12 0.28

951764724 10.5901 70 -0.34 0.18 10.59 4.24 0.97 0.41 130.7 191 2.78 181 850135212 0.31 0.99 6.04 149.58 0.48 0.3 0.01 0 good 0 65.46 0 -0.09 0.04

951764731 12.0302 50 0.34 0.16 12.03 5.05 0.96 0.59 99.59 192 2.16 182 850135212 0.18 0.99 10.22 230.73 0.23 0.32 0.04 0.01 good 1.72 88.04 0 -0.05 0.06

951764739 1.05082 80 0.2 0.03 1.05 3.43 0.07 0.55 99.4 193 2.24 183 850135212 0.09 0.89 28.31 142.88 0.27 0.32 0.1 0 noise 1.72 25.5 0.04 -0.04 0

951764746 0.00227 170 -0.54 0.1 0 4.6 nan 0.54 120.51 194 0.88 184 850135170 0.18 0.18 nan 0 0.47 0.22 0.01 0 noise 0.23 8.69384e+12 nan -0.2 0

951764753 9.52085 100 -0.76 0.05 9.52 nan nan 1.47 81.66 195 1.14 185 850135214 2.19 0.99 73.81 363.01 0.05 1.87 0.5 nan good 0 nan 0 -0.02 0.6

951764761 8.88574 90 -0.18 0.07 8.89 nan nan 1.55 80.33 196 0.51 186 850135214 3.12 0.99 80.94 522.26 0.02 nan 0.32 0 noise -0.2 nan nan nan 1.07

951764768 9.13933 30 -4.12 0.13 9.14 5.07 0.94 1.65 124.5 197 1.88 187 850135214 1.29 0.99 12.05 218.02 0.07 0.67 0.03 0.01 good 3.43 75.02 0.01 -0.06 0.06

951764776 3.37133 40 -0.34 0.12 3.37 nan nan 1.19 168.29 198 1.75 188 850135216 4.85 0.99 13.94 272.98 0.13 0.95 0.03 0 good 0.69 nan nan -0.01 0.34

951764783 23.1542 60 -0.34 0.12 23.15 nan nan 1.8 108.62 199 1.16 189 850135216 5.81 0.99 21.1 281.45 0.07 nan 0.06 0 good 0 nan nan -0.01 0.01

951764791 5.49003 40 -0.34 0.03 5.49 nan nan 1.24 215.03 200 2.34 190 850135216 2.86 0.99 17.19 176.86 0.17 0.85 0 0 good 0 nan nan -0.01 0.04

951764798 10.1731 140 0.15 0.02 10.17 nan nan 1.09 44.36 201 0.33 191 850135216 1.44 0.88 112.47 900.56 0.01 nan 0.5 nan good 0.19 nan 0 nan 0.22

951764809 20.4946 100 0.45 0.06 20.49 nan nan 0.96 88.46 202 0.55 192 850135218 0.12 0.99 100.51 745.32 0.16 0.93 0.5 nan noise 3.66 nan 0 -0 0.28

951764815 0.83423 40 0.14 0 0.83 2.52 0.33 1.55 87.73 203 0.67 193 850135220 6.78 0.99 19.38 250.21 0.04 nan 0.5 0 noise nan 30.85 0.03 nan 0.39

951764821 0.83144 60 -0.33 0.03 0.83 nan nan 1.48 93.33 204 1.25 194 850135220 13.11 0.99 23.91 360.76 0.06 nan 0.5 nan noise nan nan 0 -0 0.45

951764827 0.0031 170 0.22 nan 0 nan 1 1.32 71.84 205 0.67 195 850135196 0.8 0.18 nan 0 0.11 1.32 0.5 nan noise -0.09 nan nan -0.03 0

951764835 9.1322 170 1.03 0.01 9.13 nan nan 2.1 18.21 206 0.18 196 850135270 1.31 0.99 134.42 1226.98 0.01 0.47 0.5 0 noise -1.97 nan nan -0.03 0.92

951764842 2.50341 60 0.69 0.12 2.5 3.24 0.77 0.56 52.04 207 0.71 197 850135318 0.39 0.99 35.89 522.61 0.11 1 0.5 0.01 good 0.46 42.86 0.09 -0 6.18

951764848 0.71105 70 0.69 0.23 0.71 3.24 0.81 1.68 152.11 208 1.68 198 850135324 2.57 0.99 30.32 279.83 0.03 nan 0 0 good 2.01 43.36 0.02 -0.03 1.46

951764855 4.23894 100 0.89 0.08 4.24 2.84 0.87 0.76 62.2 209 1.43 199 850135332 0.38 0.99 34.3 386.55 0.17 0.23 0.42 0.01 good 2.58 48.46 0.03 -0.02 1.2

951764862 2.10123 60 0.34 0.19 2.1 3.77 0.88 0.47 107.69 210 2.09 200 850135334 0.2 0.99 27.73 146.3 0.38 0.22 0.01 0 good 0.96 51.56 0.01 -0.03 0.19

951764868 13.3805 70 0 0.17 13.38 4.07 0.97 0.32 114.51 211 2.43 201 850135334 0.33 0.99 40.84 106.77 0.45 0.15 0.01 0.02 good 0.21 77.68 0.01 -0.1 0.07

951764875 1.47967 70 0.96 0.16 1.48 4.41 0.91 0.76 139.71 212 2.7 202 850135334 0.16 0.99 38.05 250.14 0.46 0.22 0.01 0 good 1.65 49.67 0.01 -0.02 0.85

951764883 3.91798 170 0.84 0.06 3.92 2.83 0.47 0.66 30.22 213 0.64 203 850135338 0.49 0.99 39.03 698.98 0.03 1.3 0.5 0.02 noise 1.13 31.63 0.09 -0.02 1.67

951764889 7.6392 170 -0.4 0.1 7.64 4.4 0.94 0.92 18.94 214 0.43 204 850135338 0.63 0.99 58.52 1128.71 0.01 nan 0.5 0 noise -1.82 60.31 0.02 -0.01 0.57

951764895 5.06408 90 -0.75 0.11 5.06 3.45 0.9 0.27 66.73 215 1.69 205 850135340 0.6 0.99 47.03 320.29 0.28 0.12 0.08 0.01 good 0.48 52.68 0.02 -0.01 0.12

951764903 6.99842 70 -0.69 0.16 7 4.24 0.95 0.54 88.92 216 2.45 206 850135340 0.56 0.99 38.71 114.38 0.27 0.22 0.01 0.01 good 0.48 67.34 0.01 -0.03 0.08

951764909 3.95053 60 0 0.07 3.95 2.51 0.91 0.92 87.78 217 2.13 207 850135340 0.43 0.99 33.65 240.48 0.2 0.21 0.5 0 noise 2.82 50.2 0.02 -0.02 0.02

951764916 0.01033 150 -5.08 nan 0.01 3.69 0 0.71 75.75 218 1.75 208 850135340 0.69 0.54 nan 0 0.17 0.23 0.5 0 noise 0.17 36.23 0 -0.04 0

951764923 0.00558 170 -0.24 nan 0.01 4.02 nan 0.81 65.82 219 1.31 209 850135340 0.48 0.39 nan 0 0.17 0.29 0.38 0 noise 1.98 56.03 0 -0.02 0

951764928 0.00765 120 0.69 nan 0.01 3.98 nan 0.71 90.28 220 1.97 210 850135340 0.34 0.36 nan 0 0.24 0.19 0.38 0 noise 1.57 30.19 0 -0.03 0

951764935 0.01354 120 -0.07 nan 0.01 3.24 0 0.6 76.25 221 1.75 211 850135340 0.67 0.63 nan 0 0.21 0.22 0.5 0 noise 1.13 25.74 0 -0.03 0

951764939 0.00827 140 -2.55 nan 0.01 3.41 nan 0.69 85.12 222 1.91 212 850135340 0.44 0.51 nan 0 0.24 0.21 0.46 0 noise 2.19 27.52 0 -0.01 0

951764944 4.10667 120 -0.37 0.08 4.11 2.76 0.71 0.32 65.35 223 1.09 213 850135342 0.17 0.99 42.39 350.63 0.27 0.8 0.5 0.01 noise -0.01 42.39 0.05 -0.04 2.35

951764951 2.11601 60 -0.1 0.05 2.12 nan nan 0.96 94.86 224 1.72 214 850135342 0.11 0.99 46.58 1667.7 0.07 0.71 0.49 0 good -0.69 nan nan -0.01 0.09

951764958 2.80133 120 0.78 0.01 2.8 2.87 0.69 1.1 30.85 225 0.39 215 850135344 0.49 0.99 59.48 1235.88 0.01 nan 0.5 0.06 noise 1.05 66.99 0.02 nan 0.16

951764964 4.36522 120 1.18 0.03 4.37 1.94 0.38 1.14 30.17 226 0.25 216 850135326 1.77 0.92 79.98 675.41 0 nan 0.23 0.04 noise -0.56 35.29 0.07 -0.01 0.08

951764971 1.73925 120 -0.51 0.02 1.74 3.14 0.49 0.65 40.53 227 0.45 217 850135344 0.62 0.75 68.39 586.07 0.04 nan 0.34 0.01 noise -0.54 36.95 0.04 -0.04 0.27

951764977 3.93389 160 -0.44 0.02 3.93 2.41 0.35 0.88 27.64 228 0.38 218 850135344 0.42 0.99 83.86 1007.86 0.03 1.36 0.5 0.03 noise 1.12 36.67 0.07 -0.01 0.15

951764983 3.26614 170 -1.48 -0.06 3.27 nan nan 1.02 31.53 229 0.26 219 850135326 1.64 0.99 38.25 1008.08 0.03 nan 0.5 0 noise -1.4 nan nan -0.02 0.17

951764990 1.58476 170 2.39 0.02 1.58 nan nan 0.96 24.14 230 0.4 220 850135344 0.29 0.99 74.77 1473.81 0.01 nan 0.5 0 noise 1.58 nan nan -0 6.56

951764995 1.82656 140 1.16 0.03 1.83 3.27 0.47 1.03 43.89 231 0.64 221 850135344 0.53 0.62 43.18 609.47 0.02 nan 0.19 0.03 noise 1.43 43.98 0.04 nan 0.14

951765001 0.65494 170 1.03 -0 0.65 1.35 0.04 1.5 28.63 232 0.39 222 850135344 0.63 0.66 34.41 608.55 0.02 nan 0.5 0.01 noise -2.25 21.23 0.21 -0.1 2.62

951765009 18.7407 30 0 0.18 18.74 7.7 1 0.49 173.8 233 3.44 223 850135356 0.06 0.99 34.7 55.38 0.65 0.16 0 0 good 1.37 124.54 0 -0.06 0

951765017 1.72437 160 -3.95 0.03 1.72 nan nan 2.47 45.76 234 0.69 224 850135372 4.91 0.99 67.1 1747.95 0.04 1.33 0.48 0 noise 2.67 nan nan nan 2.76

951765023 2.14391 60 0 0.12 2.14 6.34 0.98 0.58 120.35 235 1.8 225 850135374 0.14 0.99 27.18 212.42 0.41 0.19 0.02 0 good -0.96 69.21 0 -0.04 0.15

951765031 6.40951 140 2.33 -0.01 6.41 1.22 0.33 1 27.72 236 0.28 226 850135368 2.09 0.99 66.78 819.7 0.04 1.39 0.5 0.16 noise -1.16 36.92 0.22 -0.01 0.11

951765039 6.89932 140 2.37 0 6.9 1 0.38 2.2 25.64 237 0.25 227 850135368 1.95 0.99 87.73 1010.54 0 nan 0.37 0.15 noise -0.03 38.77 0.21 -0.38 0.08

951765044 6.80167 120 2.05 -0 6.8 2.41 0.5 1.04 38 238 0.31 228 850135368 4.18 0.99 89.46 968.25 0.01 1.14 0.5 0.05 noise -1.64 45.06 0.06 -0.01 0.08

951765049 0.0031 80 0.41 nan 0 3.47 nan 0.69 240.3 239 2.63 229 850135370 0.4 0.18 nan 0 0.95 0.14 0.01 0 noise 1.44 1.40477e+13 nan -0.07 0

951765055 0.04092 40 0.69 nan 0.04 2.32 0.29 0.65 205.41 240 2.46 230 850135370 0.46 0.77 8.3 3.5 0.82 0.14 0.34 0 noise 1.03 27.43 0.05 -0.07 0

951765061 0.04764 70 0 nan 0.05 6.2 0.91 0.62 321.87 241 4.77 231 850135372 0.22 0.69 9.68 13.22 1.13 0.11 0 0 good 1.18 42.23 0 -0.12 0

951765067 1.06364 100 0.16 0.05 1.06 2.1 0.66 0.66 62.35 242 0.95 232 850135372 0.62 0.99 55.37 454.97 0.23 0.16 0.5 0.01 good -2.2 35.52 0.09 -0.01 2.57

951765074 0.86037 70 0.34 0.01 0.86 3.49 0.51 0.22 133.16 243 1.75 233 850135372 0.37 0.96 27.8 124.64 0.57 0.12 0.5 0.01 noise -0.76 36.03 0.08 -0.07 14.96

951765080 3.61148 160 -3.12 -0.03 3.61 1.89 0.51 0.03 62.79 244 0.71 234 850135376 1.93 0.99 33.71 415.94 0.6 nan 0.5 0.03 noise 3.24 29.56 0.36 nan 3.62

951765085 0.00713 80 -0.34 nan 0.01 2.55 0 0.7 203.93 245 1.79 235 850135372 0.2 0.4 nan 0 0.62 0.16 0.35 0 noise 4.43 35.61 0 -0.04 0

951765093 0.0125 30 0.69 nan 0.01 7 0.56 0.63 715.89 246 2.7 236 850135376 0.69 0.49 nan 0 0.74 0.11 0.31 0 noise nan 69.88 0 -0.12 0

951765098 0.62353 80 0.62 0.07 0.62 4.45 0.61 0.65 96.24 247 1.8 237 850135380 0.13 0.99 34.92 552.24 0.35 0.18 0.12 0 good 2.27 34.89 0.07 -0.03 3.39

951765105 17.799 120 -2.42 -0.01 17.8 nan nan 1.19 39.68 248 0.18 238 850135368 2.37 0.99 53.48 788.29 0.01 nan 0.37 0 noise 1.15 nan nan nan 0.19

951765110 3.41453 160 0.05 0.01 3.41 2.5 0.69 0.04 44.13 249 0.49 239 850135376 12.17 0.99 30.93 679.96 0.38 nan 0.5 0.02 noise -0.93 43.2 0.09 nan 1.6

951765118 1.60491 150 4.32 -0.01 1.6 nan nan 2.02 47.36 250 0.52 240 850135388 32.09 0.99 50.88 1546.16 0.01 1.62 0.46 0 noise -9.75 nan nan -0.76 1.82

951765124 10.2191 120 -3.34 0.03 10.22 nan nan 1.06 36.19 251 0.31 241 850135368 3.68 0.99 27.06 457.71 0.01 nan 0.5 0 noise 1.23 nan nan nan 0.06

951765132 6.29749 120 1.21 0.03 6.3 1.77 0.25 1.07 41.41 252 0.38 242 850135390 1.21 0.99 67.16 800.86 0.05 0.8 0.5 0.05 noise -0.01 33.52 0.2 -0.01 0.08

951765138 4.64836 160 -2.88 -0 4.65 1.42 0.21 0.91 34.52 253 0.3 243 850135390 0.08 0.99 76.98 1010.7 0.02 nan 0.5 0.06 noise -0.84 33.01 0.17 nan 0.13

951765145 5.76635 80 0 0.11 5.77 5.83 0.99 0.55 112.37 254 1.17 244 850135390 0.31 0.99 33.48 140.59 0.37 0.19 0.02 0 good -4.33 65.76 0 -0.05 0.03

951765151 12.9111 40 -0.69 0.11 12.91 6.16 1 0.36 174.67 255 2.67 245 850135416 0.27 0.99 39.63 72.37 0.7 0.15 0.01 0 good 0.69 82.53 0 -0.04 0.01

951765159 25.3179 100 1.17 -0 25.32 1.86 0.76 1.57 34.79 256 0.1 246 850135424 1.84 0.99 33.89 464.32 0 nan 0.19 0.19 noise -3.31 94.62 0.12 -0.01 0.13

951765164 8.99611 170 -1.01 0.06 9 3.33 0.92 1.59 55.43 257 0.48 247 850135418 1.76 0.99 48.51 282.37 0.02 nan 0.5 0.07 noise 0.84 100.29 0.04 nan 1.33

951765170 5.02088 170 0.1 0.02 5.02 nan nan 2.02 31.36 258 0.23 248 850135430 0.79 0.99 54.83 754.73 0 nan 0.5 0 noise -0.19 nan nan -0.03 0.6

951765176 2.50662 70 -0.55 0.04 2.51 3.43 0.8 0.41 91.38 259 1.43 249 850135430 0.3 0.99 24.52 323.88 0.33 0.88 0.5 0.02 good -0.41 49.23 0.05 -0.03 1.87

951765182 10.2026 140 1.35 0.04 10.2 2.94 0.95 0.87 40.75 260 0.11 250 850135430 0.91 0.99 54.31 516.35 0.02 nan 0.5 0.03 good 2.98 82.95 0.01 -0.01 0.38

951765189 3.2618 100 -2.29 0.01 3.26 nan nan 0.93 44.59 261 0.47 251 850135426 4.06 0.98 27.44 302.37 0.02 nan 0.5 0 noise 2.4 nan nan nan 0.56

951765195 0.83537 170 0.57 -0.05 0.84 1.8 0.21 1.54 63 262 0.5 252 850135418 2.73 0.97 31.34 177.79 0.03 nan 0.5 0.02 noise 4.45 27.92 0.19 nan 21.15

951765200 0.81367 170 -0.79 0.05 0.81 2.6 0.37 0.96 45.71 263 0.54 253 850135450 5.67 0.99 79.05 876.67 0.03 nan 0.24 0 noise 5.15 28.74 0.12 nan 22.76

951765206 0.65071 130 -2.2 0.12 0.65 3.79 0.72 0.77 77.28 264 1.09 254 850135444 0.35 0.99 45.69 476.49 0.11 0.36 0.04 0 noise -1.02 28 0.1 -0.01 15.46

951765213 0.47751 50 0.34 0.12 0.48 4.18 0.98 1.47 120.86 265 1.57 255 850135450 1.97 0.99 61.81 131.62 0.07 nan 0 0 good 1.03 61.9 0 nan 0

951765219 0.00651 170 -3.23 nan 0.01 4.35 0 1.24 151.56 266 0.97 256 850135460 0.7 0.34 nan 0 0.27 0.27 0.5 0 noise -1.76 35.5 0 -0.04 0

951765225 5.07885 130 -3.71 0.16 5.08 3.02 0.92 0.73 63.69 267 1.3 257 850135454 0.16 0.99 28.04 430.28 0.15 0.32 0.34 0.01 good -0.78 53.42 0.04 -0.02 1.4

951765231 2.53317 100 -1.9 0.06 2.53 2.41 0.64 0.81 55.35 268 0.74 258 850135470 0.11 0.99 43.21 544.27 0.16 0.55 0.23 0.03 noise 0.14 48.87 0.03 -0.01 0.27

951765238 2.03313 120 -4.4 0.02 2.03 2.19 0.45 0.45 41.71 269 0.68 259 850135452 5.59 0.99 60.02 654.48 0.07 0.6 0.34 0.02 noise -1.11 33.45 0.1 -0.04 0.68

951765243 5.11461 100 -1.37 0.06 5.11 2.67 0.87 0.67 91.63 270 1.57 260 850135460 0.81 0.99 65.38 409.3 0.23 0.21 0.01 0.01 good -0.66 54.71 0.02 -0.02 0.45

951765247 7.2672 150 -2.46 0.06 7.27 1.62 0.69 1 57.28 271 0.64 261 850135470 0.17 0.99 82.77 343.01 0.08 0.45 0.5 0.02 noise 0.02 42.76 0.04 -0.03 1.05

951765252 4.16857 120 -1.44 0.1 4.17 3.6 0.85 0.89 151.42 272 1.77 262 850135470 0.14 0.99 64.42 347.29 0.43 0.25 0 0.01 good 0.14 40.98 0.05 -0.02 0.44

951765258 9.5782 80 -2.67 0.08 9.58 2.63 0.81 0.93 81.69 273 0.95 263 850135470 0.07 0.99 56.75 226.82 0.18 0.29 0.4 0.03 good 0.34 51.31 0.05 -0 0.21

951765264 2.62855 90 -0.77 0.19 2.63 4.22 0.93 0.87 196.8 274 2.09 264 850135478 0.36 0.99 63.37 347.09 0.56 0.19 0 0 good 1.99 59.42 0 -0.04 0.11

951765270 2.10805 70 0.69 0.08 2.11 3.48 0.95 0.34 103.97 275 1.68 265 850135466 0.87 0.99 38.91 270.23 0.45 0.14 0.41 0 good 0.76 55.06 0 -0.08 0.48

951765276 6.53124 100 -1.92 0.02 6.53 2.23 0.65 0.78 105.64 276 1.28 266 850135470 0.15 0.99 80.68 300.26 0.26 0.29 0.47 0.02 good -0.14 38.09 0.06 -0.02 0.5

951765282 2.79348 80 -1.03 0.19 2.79 6.02 0.99 0.77 262.93 277 3.2 267 850135470 0.21 0.99 67.61 221.04 0.79 0.18 0 0 good 0.21 76.77 0 -0.08 0

951765288 9.12827 50 -0.76 0.09 9.13 3.9 1 1.07 284.26 278 1.16 268 850135474 0.12 0.99 23.62 74.75 0.27 0.33 0 0 good -0.69 83.49 0 -0 0

951765293 5.05044 70 -0.62 0.12 5.05 3.69 0.99 0.73 173.58 279 2.45 269 850135472 0.15 0.99 50.96 209.01 0.55 0.16 0 0 good -0.89 72.1 0 -0.04 0.04

951765299 4.3677 70 -1.03 0.16 4.37 6.08 0.98 0.8 274.42 280 2.88 270 850135474 0.01 0.95 47.48 147.52 0.72 0.19 0 0 good -0.21 93.52 0 -0.01 0.04

951765303 6.53413 140 -1.84 0.03 6.53 1.58 0.56 0.87 46.29 281 0.46 271 850135478 0.63 0.99 94.98 383.23 0.08 0.29 0.38 0.02 good -0.2 34.5 0.06 -0.01 0.71

951765309 2.50352 70 -0.26 0.12 2.5 3.63 0.93 0.87 141.18 282 1.44 272 850135478 0.28 0.99 39.07 325.75 0.32 0.25 0.01 0 good -0.69 61.33 0 -0.04 0.15

951765314 9.83788 70 -1.74 0.07 9.84 3.74 0.96 0.87 111.1 283 1.27 273 850135478 0.24 0.99 70.31 149.92 0.33 0.15 0.02 0 good 0.34 65.22 0 -0.03 0.07

951765319 0.90471 70 -0.76 0.11 0.9 4.06 0.88 1.03 280.94 284 3.71 274 850135474 0.02 0.99 34.51 137.07 1 0.16 0 0 good -0.69 48.25 0 -0.03 0.09

951765323 3.66574 50 -0.69 -0.02 3.67 3.32 1 1.17 171.31 285 2.26 275 850135476 0 0.99 65.95 88.59 0.63 0.22 0 0 good 0.69 51.45 0.01 -0.05 0

951765329 4.50648 90 -1.18 0.1 4.51 2.84 0.82 0.96 97.5 286 1.49 276 850135476 0.04 0.96 56.93 218.51 0.29 0.26 0.05 0 good -1.03 48.39 0.01 -0.03 0.33

951765334 6.26566 70 -1.24 0.11 6.27 3.12 0.83 0.91 123.72 287 1.32 277 850135476 0.13 0.99 49.78 215.26 0.24 0.4 0.04 0 good -1.37 40.5 0.03 -0.02 0.98

951765339 1.33551 60 -1.1 0.07 1.34 4.55 0.88 0.8 241.56 288 2.99 278 850135480 0.31 0.99 72.34 214.3 0.63 0.14 0 0 good -0.69 53.86 0 -0.02 0.61

951765343 4.19781 70 -0.27 0.05 4.2 2.45 0.76 0.8 149.07 289 1.49 279 850135478 0.39 0.99 70.01 267.93 0.44 0.18 0.5 0.01 good 0 32.01 0.06 -0.03 1.05

951765349 10.8901 60 -0.69 0.11 10.89 3.44 0.98 0.7 188.92 290 2.1 280 850135478 0.36 0.99 61.77 214.53 0.57 0.14 0.01 0 good 0 66.33 0 -0.03 0.02

951765353 3.54545 110 -0.98 -0.01 3.55 1.79 0.81 0.89 71.82 291 1.02 281 850135480 0.08 0.99 63.94 311.37 0.2 0.25 0.1 0 good -0.62 41.48 0.02 -0.01 0.32

951765358 2.30501 80 -0.21 0.12 2.31 3.58 0.82 0.82 187.19 292 1.5 282 850135478 0.35 0.99 53.6 291.9 0.44 0.14 0 0 good 1.1 50.1 0 -0.04 0.15

951765364 39.3285 60 -0.27 0.06 39.33 4.18 1 0.26 181.77 293 2.62 283 850135482 0.54 0.99 64.71 91 0.81 0.16 0 0 good 0.69 81.96 0 -0.15 0

951765369 13.1377 70 -0.81 0.06 13.14 2.8 0.86 0.93 90.77 294 1.44 284 850135484 0.06 0.99 58.87 239.58 0.32 0.16 0.33 0.01 good nan 48.22 0.03 -0.01 0.4

951765374 0.32385 150 -2.14 0.02 0.32 2.53 0.1 1.47 179.54 295 0.77 285 850135478 0.72 0.99 57.11 291.03 0.07 0.99 0.11 0 noise -0.12 13.73 0.26 -0.08 191.29

951765379 2.20085 160 -0.65 0.01 2.2 2.33 0.19 1 71.64 296 0.46 286 850135478 1.68 0.99 80.64 763.1 0.03 1.55 0.36 0 noise 0.21 22.79 0.13 nan 14.53

951765385 0.00785 110 0.41 nan 0.01 3.99 nan 0.41 147.87 297 0.82 287 850135476 0.01 0.48 nan 0 0.45 0.22 0.4 0 good -0.62 27.41 0 -0.06 0

951765393 5.09063 160 -0.55 0.1 5.09 3.25 0.91 1.59 68.23 298 0.62 288 850135478 2.98 0.99 62.81 413.66 0.05 0.95 0.5 0 noise 0.65 49.39 0.01 nan 1.06

951765396 0.00289 160 -0.42 nan 0 4.32 nan 0.63 181.48 299 0.94 289 850135492 2.64 0.22 nan 0 0.04 nan 0.5 0 noise -1.02 2.85682e+12 nan nan 0

951765400 16.8106 140 3.02 0.04 16.81 2.98 0.81 1.19 40.22 300 0.29 290 850135494 1.42 0.99 46.83 492.07 0.02 nan 0.17 0.01 noise -2.51 60.96 0.02 -0.02 0.04

951765405 33.1885 80 -1.24 0.09 33.19 5.87 0.99 1.85 196.94 301 1.51 291 850135494 4.73 0.99 59.55 115.09 0.16 nan 0 0 good 0.62 85.28 0 nan 0.01

951765411 11.0497 130 -0.48 0.06 11.05 3.45 0.86 1 36.4 302 0.26 292 850135494 1.34 0.99 50.74 566.45 0.01 nan 0.45 0.01 noise 1.41 58.97 0.01 nan 0.11

951765414 5.89448 40 0.69 0.13 5.89 3.91 0.98 0.51 223.72 303 3.06 293 850135496 0.62 0.99 52.33 71.63 0.79 0.15 0 0 good 0.34 72.96 0 -0.08 0

951765420 13.1448 70 0.41 0.09 13.14 2.81 0.99 0.34 84.18 304 1.81 294 850135498 0.22 0.99 49.98 111.26 0.35 0.21 0.02 0 good 0.21 71.9 0 -0.06 0.01

951765423 14.1916 100 -0.35 0.1 14.19 3.32 0.98 0.44 59.55 305 1.73 295 850135500 0.54 0.99 45.27 140.5 0.23 0.18 0.19 0 good 0.2 82.86 0 -0.04 0.07

951765430 5.69876 110 -2.96 0.07 5.7 3.1 0.95 0.6 96.35 306 2.2 296 850135504 0.13 0.99 64.15 110.91 0.36 0.16 0 0 good 0.27 48.63 0.03 -0.02 0.11

951765435 19.8853 80 0 0.12 19.89 6.01 1 0.22 163.91 307 3.01 297 850135506 0.19 0.99 63.13 97.65 0.69 0.14 0 0 good 0 89.4 0 -0.16 0.02

951765440 9.97935 80 -3.57 0.06 9.98 1.95 0.5 0.36 65.64 308 1.24 298 850135520 0.97 0.99 56.14 917.5 0.15 0.22 0.37 0.02 good 0.41 40.54 0.06 -0.01 0.09

951765447 1.86221 60 -0.14 0.03 1.86 2.63 0.42 0.26 41.79 309 1.1 299 850135522 2.21 0.99 74.83 1479.83 0.19 0.14 0.5 0.01 good 0 32.71 0.04 -0.03 0.93

951765454 5.47349 130 0.67 0.02 5.47 2.72 0.49 1.3 48.24 310 0.81 300 850135526 0.48 0.98 51.92 1069.77 0 nan 0.46 0.02 good -0.29 36.22 0.05 nan 0.23

951765460 4.44892 70 -0.21 0.1 4.45 4.46 0.98 0.81 71.54 311 0.95 301 850135526 0.46 0.99 52.25 226.24 0.17 1.29 0.43 0 good -0.21 58.94 0 -0.01 0.23

951765467 9.98162 30 0 0.24 9.98 4.45 0.96 0.16 314.61 312 4.9 302 850135528 0.68 0.77 13.32 98.02 1.08 0.08 0 0 good 0 63.59 0.01 -0.48 0.01

951765473 4.10409 40 0 0.06 4.1 2.8 0.94 0.16 113.73 313 1.72 303 850135528 4.91 0.6 28.21 319.21 0.36 0.14 0.5 0 noise 6.18 69.04 0 -0.06 0.25

951765478 17.4047 30 6.52 0.07 17.4 2.4 0.78 1.41 131.76 314 1.8 304 850135532 0.57 0.97 18.69 170.53 0.01 nan 0.02 0.03 good nan 48.68 0.07 nan 0.61

951765485 13.9773 40 1.72 0.04 13.98 2.52 0.8 1.54 167.8 315 1.97 305 850135532 0.87 0.99 37.84 276.76 0.03 nan 0.5 0.03 good nan 46.45 0.07 nan 0.13

951765490 5.27323 20 nan 0.16 5.27 5.02 0.97 0.18 276.16 316 1.62 306 850135528 9.85 0.69 34.6 372.84 0.26 0.19 0.09 0 noise -0.69 59.62 0 -0.13 0.03

951765497 8.33011 30 1.37 0.02 8.33 1.85 0.42 1.58 129.34 317 1.72 307 850135532 0.56 0.99 70.69 594.95 0.02 nan 0.43 0.03 noise -15.8 34.59 0.09 nan 0.5

951765502 13.287 60 3.88 0.02 13.29 1.94 0.7 0.88 74.88 318 0.9 308 850135532 0.28 0.99 19.79 390.99 0.03 nan 0.41 0.05 noise -2.97 46 0.07 -0.01 0.26

951765508 2.13461 50 -0.21 0.05 2.13 4.76 0.89 0.74 123.99 319 1.73 309 850135532 0.16 0.99 65.81 206.29 0.35 0.43 0.01 0 good 2.75 49.17 0.01 -0.01 0.03

951765513 3.90661 20 -0.69 0.08 3.91 5.43 0.95 0.73 92.38 320 2.58 310 850135534 5.24 0.85 60.19 556.47 0.23 0.1 0.16 0 noise nan 63.22 0 -0.01 0.02

951765520 24.5397 150 3.15 0.01 24.54 nan nan 1.22 40.54 322 0.23 311 850135538 1.26 0.99 56.65 489.06 0 nan 0.5 0 noise 1 nan nan -0 0.23

951765525 12.0324 160 -2.46 0.03 12.03 2.12 0.49 1.04 43.27 323 0.21 312 850135538 1.34 0.87 49.35 407.97 0.02 nan 0.5 0.04 noise -0.48 45.74 0.05 nan 0.41

951765530 2.95127 80 0.14 0.1 2.95 4.1 0.87 0.65 96.61 324 1.98 313 850135540 0.86 0.99 57.93 449.71 0.21 0.21 0 0 good 0.76 42.12 0.02 -0.03 0.18

951765535 3.3674 40 nan 0.1 3.37 3.79 0.88 0.63 102.58 325 2.06 314 850135540 0.85 0.99 35.02 174.93 0.3 0.19 0.04 0.01 good 0.34 49.14 0.01 -0.04 0.16

951765541 1.58972 70 -0.34 0.08 1.59 3.35 0.62 1.26 84.77 326 0.47 315 850135538 1.76 0.99 39.98 297.02 0.02 nan 0.06 0 good 0.62 34.71 0.03 -0.03 0.41

951765547 3.5935 70 0 0.12 3.59 2.37 0.55 0.26 111.7 327 2.55 316 850135542 0.44 0.99 43.52 167.28 0.49 0.18 0.02 0 good 0.12 37 0.05 -0.09 0.08

951765552 2.32971 100 -0.55 0.08 2.33 3.21 0.69 0.67 80.97 328 2.02 317 850135542 0.29 0.99 37.02 291.49 0.23 0.23 0.35 0.01 good 2.06 43.01 0.02 -0.02 0.51

951765557 4.0796 100 0 0.1 4.08 4.25 0.88 0.98 79.39 329 0.47 318 850135538 1.95 0.99 47.98 203.49 0.06 nan 0.5 0.01 good 0.26 62.88 0 -0 0.13

951765561 1.76766 60 0.69 0.06 1.77 4.46 0.93 0.67 106.44 330 1.84 319 850135546 0.01 0.94 48.54 106.04 0.29 0.32 0.02 0 good 1.3 43.06 0.01 -0.04 7.59

951765566 3.18388 40 0.34 0.08 3.18 3.57 0.8 0.66 139.12 331 2.36 320 850135548 0.01 0.96 39.89 127.15 0.38 0.3 0.02 0 good 2.06 47.13 0.02 -0.04 0.13

951765571 2.77426 70 0.14 0.12 2.77 4.01 0.82 0.59 136.85 332 2.68 321 850135550 0.98 0.99 39.4 257.24 0.38 0.15 0.01 0 good 1.79 43.14 0.02 -0.06 0.32

951765576 12.7688 20 nan 0.13 12.77 2.84 0.88 0.3 154.56 333 3.07 322 850135550 0.83 0.99 56.81 110.93 0.63 0.12 0.06 0.02 noise -0.69 56.65 0.03 -0.11 0.04

951765582 14.778 60 0 0.05 14.78 3.51 0.86 0.22 170.34 334 3.49 323 850135550 0.91 0.99 52.15 105.33 0.74 0.1 0.01 0.02 good 0.21 59.1 0.03 -0.19 0.05

951765587 2.62793 120 0 0.11 2.63 4.05 0.95 0.65 78.83 335 2.14 324 850135552 0.18 0.99 18.69 192.15 0.3 0.16 0.06 0 good 1.48 55.83 0 -0.04 0.13

951765594 2.76868 140 -0.14 0.09 2.77 3.01 0.75 0.41 72.44 336 1.33 325 850135548 0.14 0.99 50.71 305.21 0.27 0.37 0.5 0 good 0.45 43.45 0.02 -0.03 0.82

951765600 0.00796 170 0.37 nan 0.01 3.56 nan 1.24 107.16 337 1.02 326 850135558 4.81 0.52 nan 0 0.08 nan 0.5 0 noise 0.02 35.83 0 -0.02 0

951765606 7.46973 50 0 0.1 7.47 4.84 0.96 1.35 127.65 338 1.56 327 850135564 6.27 0.99 30.61 151.59 0.05 nan 0.03 0 good 1.03 75.46 0 -0.01 0.2

951765611 23.9673 60 0 0.13 23.97 3.71 0.98 0.73 168.07 339 1.96 328 850135566 0 0.99 58.3 152.94 0.34 0.41 0 0.01 good 0.82 117.66 0 -0.05 0.01

951765617 2.60169 80 0.34 0.1 2.6 2.42 0.64 0.62 113.92 340 1.38 329 850135566 0.17 0.99 37.35 442.26 0.3 0.38 0.33 0 good 1.24 43.65 0.02 -0.04 0.26

951765623 6.50075 90 0.27 0.12 6.5 3.56 0.95 0.7 107.6 341 2.27 330 850135568 0.1 0.99 42.9 184.27 0.34 0.21 0.01 0 good 1.57 66.4 0 -0.02 0.07

951765629 3.00129 60 1.37 0.25 3 4.96 0.98 0.6 379.77 342 5.43 331 850135570 0.44 0.97 30.83 97.69 1.08 0.16 0 0 good 0.82 65.17 0 -0.14 0

951765635 0.11336 50 4.12 nan 0.11 3.8 0.19 0.89 162.18 343 1.75 332 850135570 0.62 0.73 26.07 172.34 0.39 0.41 0.1 0 noise 1.17 32.51 0.01 -0.04 0

951765641 4.34527 80 0 0.12 4.35 3.23 0.71 0.6 165.25 344 2.7 333 850135572 0.63 0.99 26.61 259.8 0.45 0.19 0.15 0 good 0.61 41.47 0.03 -0.05 0.26

951765647 9.12497 90 0.69 0.18 9.12 4.87 0.94 1.43 197.3 345 2.46 334 850135574 6.69 0.99 34.73 114.37 0.08 nan 0.01 0 good 1.52 65.29 0.01 -0.01 0.01

951765653 9.42195 70 0 0.07 9.42 3.89 0.99 0.81 111.19 346 1.81 335 850135576 0.42 0.99 45.01 115.34 0.34 0.15 0.11 0 good 3.09 78.11 0 -0.03 0.01

951765661 15.6626 110 0.62 0.15 15.66 4.43 0.97 0.56 178.05 347 1.99 336 850135578 0.47 0.99 59.73 102.87 0.43 0.26 0 0 good 0.34 72.23 0.01 -0.09 0.02

951765666 9.72245 100 -6.32 0 9.72 1.84 0.58 0.27 71.6 348 1.09 337 850135576 0.78 0.99 80.13 259.35 0.32 0.14 0.24 0.01 good -0.2 39.77 0.07 -0.03 0.33

951765669 5.41325 80 0.21 0.1 5.41 2.49 0.75 0.66 128.98 349 2.77 338 850135580 0.32 0.99 64.93 158.97 0.41 0.21 0.06 0 good 0.82 39.66 0.04 -0.05 0.1

951765675 18.6652 90 -0.41 0.04 18.67 3.91 0.94 0.21 119.65 350 2.52 339 850135580 0.52 0.99 59.94 172.66 0.51 0.12 0.18 0.02 good -0.31 78.77 0.01 -0.15 0.03

951765681 14.6917 130 0.82 0.07 14.69 2.77 0.88 0.55 97.06 351 2.24 340 850135580 0.39 0.99 57.46 140.99 0.26 0.26 0.5 0.03 good 0.94 59.98 0.02 -0.03 0.09

951765686 11.2814 130 0.27 0.08 11.28 3.25 0.98 0.56 121.6 352 2.36 341 850135582 0.47 0.99 51.64 151.62 0.3 0.26 0.27 0.01 good 0.66 73.08 0 -0.03 0.07

951765692 1.13855 80 -0.07 0.02 1.14 2.92 0.83 0.29 114.09 353 2.15 342 850135582 0.37 0.99 48.63 391.37 0.48 0.12 0.09 0 good 0.48 47.08 0 -0.06 0.09

951765697 9.08022 80 0.69 0.09 9.08 4.04 0.99 0.6 159.88 354 2.32 343 850135584 0.54 0.99 61.19 153.43 0.44 0.26 0 0 good 0.48 63.08 0 -0.1 0

951765703 3.58978 150 0.71 0.07 3.59 1.84 0.58 0.52 108.43 355 1.22 344 850135578 0.31 0.99 44.96 360.89 0.29 0.33 0.5 0 noise 0.58 35.07 0.08 -0.03 0.61

951765710 0.003 170 -1.48 nan 0 4 nan 0.76 95.93 356 1.34 345 850135584 0.25 0.23 nan 0 0.35 0.21 0.26 0 noise 1.69 3.11185e+12 nan -0.02 0

951765716 3.34756 140 0.56 0.08 3.35 2.67 0.67 0.51 96.11 357 1.55 346 850135590 0.3 0.99 47.02 324.67 0.25 0.3 0.5 0 good 0.56 39.58 0.04 -0.04 0.64

951765721 10.0994 80 -0.62 0.07 10.1 2.81 0.88 1.62 117.72 358 1.98 347 850135588 1.96 0.99 50.42 142.8 0.11 0.66 0.1 0.01 good 0.21 48.86 0.03 -0.01 0.06

951765727 15.782 140 0.37 0.1 15.78 3.97 0.93 1.51 181.62 359 3.07 348 850135588 1.1 0.99 60.27 112.92 0.09 0.76 0.01 0.01 good 1.05 56.55 0.02 -0.03 0.05

951765732 1.64645 60 -0.21 0.05 1.65 4.62 0.97 0.34 197.37 360 2.73 349 850135590 0.54 0.99 40.77 141.11 0.75 0.27 0 0 good -0.69 46.89 0.01 -0.17 0

951765737 6.97155 100 0.57 0.1 6.97 2.8 0.87 1.29 89.66 361 0.7 350 850135596 7.77 0.99 46.35 252.08 0.01 nan 0.03 0 good 2.75 50.71 0.01 nan 0.06

951765743 10.5288 110 0.86 0.04 10.53 2.1 0.3 1 58.02 362 0.32 351 850135598 1.42 0.99 75.73 626.69 0.03 nan 0.35 0.06 noise -2.02 35.64 0.11 -0.01 0.12

951765747 13.0858 130 0.87 0.01 13.09 2.04 0.34 1.18 56.22 363 0.34 352 850135598 1.44 0.99 31.45 446.75 0.01 nan 0.14 0.07 noise -1.47 37.97 0.11 -0.02 0.02

951765753 13.4251 130 -0.06 0 13.43 1.61 0.32 1.07 48.75 364 0.3 353 850135598 1.29 0.99 30.03 436.84 0.05 nan 0.09 0.11 noise -1.35 37.3 0.18 -0.02 0.05

951765758 13.2055 110 1.97 0.01 13.21 1.41 0.34 2.16 46.52 365 0.32 354 850135598 1.3 0.99 41.31 525.2 0.01 nan 0.05 0.08 noise -3.41 38.02 0.17 -0.61 0.02

951765764 19.5368 100 -0.35 0.06 19.54 4.1 0.97 1.02 58.84 366 0.33 355 850135598 1.43 0.99 31.8 348.5 0.02 nan 0.04 0.01 noise 3.84 63.32 0.02 nan 0.04

951765769 7.64943 60 0 0.13 7.65 3.92 0.97 1.36 128.07 367 0.73 356 850135598 2.81 0.99 60.32 199.26 0.07 nan 0.06 0 good 1.1 53.98 0.01 -0.01 0.11

951765775 0.31001 90 -0.41 0.04 0.31 4.1 0.68 0.62 111.08 368 1.83 357 850135602 0.29 0.86 27.56 111.46 0.35 0.19 0.32 0 noise 0.41 47.54 0 -0.05 4.84

951765780 0.00289 170 1.19 nan 0 4.64 nan 0.47 134.75 369 2.14 358 850135602 0.06 0.19 nan 0 0.49 0.21 0.46 0 noise 0 1.47191e+13 nan -0.04 0

951765786 2.58722 110 0.51 0.07 2.59 3.7 0.81 0.69 83.95 370 1.46 359 850135604 0.02 0.99 45.43 284.91 0.18 0.33 0.3 0 good 0.96 51.12 0.01 -0.01 0.27

951765793 7.54827 70 -0.34 0.07 7.55 6.74 1 0.33 210.46 371 2.55 360 850135612 0.12 0.99 52.64 78.99 0.8 0.26 0 0 good 1.37 80.65 0 -0.23 0

951765801 1.88846 80 0.21 0.05 1.89 3.46 0.66 0.67 138.63 372 1.58 361 850135606 0.16 0.99 37.74 303.18 0.54 0.22 0.46 0 good 0.62 36.46 0.06 -0.04 4.76

951765809 5.72387 130 0.18 0.06 5.72 3.89 0.93 0.19 119.03 373 1.74 362 850135602 0.23 0.99 75.95 241.1 0.49 0.15 0.5 0.01 good 0.03 48.86 0.03 -0.15 3.31

951765815 0.00713 60 -0.34 nan 0.01 5.91 nan 0.51 140.89 374 2.38 363 850135602 0.12 0.3 nan 0 0.49 0.18 0.46 0 noise 0.41 50.12 0 -0.03 0

951765820 5.49643 70 -0.69 0.1 5.5 6.31 1 0.25 233 375 3.01 364 850135610 0.12 0.99 57.86 95.94 1.07 0.19 0 0 good 0 73.48 0 -0.24 0.04

951765825 1.98983 150 0.05 0.08 1.99 2.11 0.61 0.58 65.32 376 0.95 365 850135612 0.44 0.99 115.45 645.08 0.17 1.07 0.5 0 good 1.15 30.19 0.16 -0.02 1.46

951765832 5.27602 170 0.93 0.01 5.28 1.45 0.78 1.19 32.95 377 0.71 366 850135624 0.12 0.99 42.6 568.58 0.07 0.58 0.5 0.13 noise 1.03 76.64 0.08 -0.02 1.1

951765836 1.17183 80 0.16 0.05 1.17 4.02 0.89 0.89 99.7 378 2.26 367 850135622 0.18 0.99 28.64 370.27 0.34 0.16 0.06 0.01 noise 1.37 45.85 0.05 -0.02 0.71

951765841 0.00858 150 -0.26 nan 0.01 4.76 0.5 0.52 137.58 379 1.73 368 850135614 0 0.27 2.38 0 0.39 0.23 0.5 0 noise 0.25 51.78 0 -0.02 0

951765849 1.28302 120 0.13 0.03 1.28 2.99 0.62 0.45 84.34 380 1.58 369 850135622 0.16 0.99 24.27 169.28 0.26 0.26 0.5 0.02 noise 0.22 38.21 0.11 -0.03 7.27

951765855 1.28787 110 0.15 0.05 1.29 3.15 0.65 0.77 86.98 381 1.81 370 850135628 0.37 0.99 35.92 482.31 0.26 0.23 0.5 0.02 good 1.72 38.44 0.1 -0.02 2.97

951765859 0.18828 110 0.23 0.04 0.19 3.26 0.41 0.62 87.59 382 1.91 371 850135628 0.39 0.85 25.33 136.03 0.24 0.27 0.5 0.01 noise 1.03 37.93 0.03 -0.01 8.74

951765866 3.43602 80 -0.82 0.08 3.44 3.25 0.82 0.27 85.98 383 1.64 372 850135630 0.17 0.99 53.48 306.8 0.39 0.23 0.31 0.04 good -0.14 71.39 0.07 -0.05 0.57

951765870 0.45974 70 0.48 0.06 0.46 3.05 0.81 0.6 94.19 384 1.99 373 850135636 0.69 0.99 23.73 292.94 0.29 0.21 0.47 0 noise 1.37 39.56 0.04 -0.03 5.86

951765876 0.00134 140 0.38 nan 0 2.89 nan 0.47 151.56 385 2.26 374 850135636 0.38 0.12 nan 0 0.49 0.19 0.5 0 noise 0.24 4.50625e+13 nan -0.07 0

951765881 1.33448 130 0.86 0.02 1.33 2.35 0.81 0.98 59.13 386 1.2 375 850135642 8.45 0.98 21.34 283.81 0.03 nan 0.5 0.02 good 0 48.82 0.06 nan 2

951765886 3.61417 50 -0.41 0.07 3.61 3.13 0.97 1.77 97.81 387 1.71 376 850135642 2.6 0.99 30.06 150.26 0.07 1.35 0.02 0.08 good 0.69 76.4 0.04 nan 0.03

951765892 0.73668 40 1.37 0.14 0.74 4.11 0.91 0.78 101.6 388 2.47 377 850135648 0.12 0.95 36.65 292.55 0.28 0.29 0.11 0 good 2.06 53.39 0.02 -0.01 0.86

951765898 21.7077 90 0.01 -0.01 21.71 2.49 0.94 1.4 24.14 389 0.33 378 850135690 0.47 0.99 54.46 644.79 0.01 1.55 0.5 0.06 noise 28.16 672.32 0.13 -0.01 0.3

951765904 0.91411 110 0.35 0.24 0.91 3.3 0.58 0.66 92.66 390 1.54 379 850135684 0.17 0.97 31.69 286.94 0.11 1 0 0.01 noise 0 20.57 0.14 -0.09 18.91

951765910 10.1545 100 0.32 0.11 10.15 2.04 0.87 0.66 34.58 391 0.57 380 850135690 0.17 0.99 38.31 719.8 0.05 1 0.5 0.06 noise 0 40.16 0.15 -0.01 3.04

951765913 10.8102 50 -0.34 0.01 10.81 2.4 0.52 0.49 281.45 392 3.11 381 850135072 0.38 0.99 32.7 83.33 0.9 0.22 0.01 0.02 good 0 41.89 0.04 -0.13 0.07

951765921 0.11367 80 -0.41 nan 0.11 3.22 0 0.54 206.54 393 1.81 382 850135072 0.42 0.7 14.76 48.83 0.55 0.32 0.37 0 good 0.21 23.91 0.02 -0.07 0

951765926 9.27046 70 -0.69 0.12 9.27 5.24 1 1.26 136.58 394 1.74 383 850135112 1.29 0.99 13.75 152.34 0.06 0.91 0.02 0 good 0.48 84.83 0 -0 0.04

951765931 5.00807 80 -0.69 0.07 5.01 3.88 0.83 1.25 135.64 395 1.24 384 850135118 7.23 0.99 25.1 236.37 0.04 nan 0.12 0 good -0.24 51.48 0.01 -0.01 0.14

951765944 1.90417 60 0 0.05 1.9 3.76 0.36 0.48 245.52 396 2.87 385 850135172 0.35 0.99 36.49 188.18 0.8 0.23 0 0 good 0.69 31.49 0.02 -0.16 0.15

951765950 1.62072 50 -0.69 0.04 1.62 4.09 0.32 1.35 215.39 397 1.66 386 850135174 4.38 0.99 15.78 218.98 0.16 nan 0.05 0 good 1.37 33.75 0.01 -0.02 0.09

951765955 0.16513 60 nan nan 0.17 6.15 0.89 1.59 223.68 398 2.38 387 850135324 2.03 0.89 30.02 101.26 0.02 2.62 0 0 good 1.59 47.02 0 -0.02 0

951765961 0.948 90 1.17 0.04 0.95 2.65 0.69 0.81 75.69 399 1.65 388 850135334 0.16 0.99 32.18 380.84 0.22 0.22 0.14 0.01 good 1.69 45.56 0.01 -0.03 0.86

951765966 0.10117 50 0.34 nan 0.1 2.21 0.1 0.66 122.14 400 1.66 389 850135374 0.03 0.97 23.95 78.86 0.46 0.18 0.21 0 good 0.34 25.36 0.05 -0.03 11.35

951765973 0.67416 70 0.69 0.05 0.67 3.58 0.6 0.74 115.43 401 1.77 390 850135372 0.39 0.99 47.96 420 0.42 0.14 0.07 0 good 1.72 34.58 0.09 -0.04 4.01

951765977 0.50407 80 1.03 0 0.5 3.88 0.62 0.6 63.06 402 1.21 391 850135380 0.15 0.99 49.52 637.74 0.21 0.19 0.12 0.01 good 1.51 46.33 0.01 -0.02 2.59

951765984 0.12969 160 -1.57 0.15 0.13 3.06 0.37 1.39 119.14 403 0.88 392 850135448 0.24 0.89 58.47 127.63 0.03 1.03 0.07 0 noise 2.37 16.57 0.4 -0.03 211.87

951765988 0.45085 50 -3.43 0.01 0.45 4.31 0.85 1.37 152.06 404 0.62 393 850135470 0.11 0.74 20.4 19.72 0.14 0.33 0.12 0 good -0.48 45.49 0 -0.01 0

951765995 1.32508 70 0.48 0.02 1.33 3.83 0.74 0.73 136.78 405 2.7 394 850135604 0.09 0.99 42.33 215.45 0.44 0.21 0.01 0 good 1.37 44 0.01 -0.04 0.49

951766001 0.1395 110 0.29 nan 0.14 3.13 0.77 0.59 169.88 406 2.17 395 850135684 0.16 0.92 25.78 49.92 0.25 0.78 0.01 0 noise 0 13.63 0.3 -0.16 87.57

951766007 0.57589 60 0 0.02 0.58 3.66 0.76 0.54 123.99 407 1.32 396 850135330 0.1 0.99 21.32 269.27 0.24 0.26 0.03 0 good 0.76 47.54 0 -0.03 0.23

951766012 0.09083 50 -0.96 -0.12 0.09 4.32 0 1.11 312.1 408 1.33 397 850135474 0.2 0.52 6.35 11.14 0.3 0.36 0.08 0 good -2.75 18.14 0.02 -0.01 0

951766019 0.02501 40 0 nan 0.03 10.73 0.76 0.66 494.69 409 5.05 398 850135372 0.21 0.6 nan 0 1.98 0.12 0.04 0 good 0 86.41 0 -0.17 0

951766024 0.00837 30 0.69 nan 0.01 6.81 0 0.58 607.06 410 7.22 399 850135372 0.21 0.41 nan 0 2.14 0.1 0 0 good 0.69 86.57 0 -0.16 0

951766138 3.56684 30 nan nan 3.57 2.03 0.5 0.56 46.73 0 1.68 0 850137692 0.75 0.99 22.36 402.05 0.27 0.27 0.5 0.01 good 0.69 21.36 0.05 -0.04 0.05

951766144 10.9933 30 nan 0.09 10.99 5.03 0.94 0.49 170.97 1 4.11 1 850137694 0.73 0.99 9.47 141.83 0.52 0.4 0 0.01 good 1.03 69.52 0.01 -0.11 0

951766152 7.44338 30 nan 0.07 7.44 2.72 0.8 0.56 97.33 2 2.47 2 850137694 0.61 0.99 13.98 293.31 0.27 0.25 0.5 0.01 good 0 43.3 0.01 -0.05 0.23

951766160 4.75634 40 nan 0.1 4.76 2.63 0.71 0.54 108.12 3 2.6 3 850137694 0.52 0.99 35.05 247.18 0.31 0.22 0.07 0.01 good 0.82 31.66 0.02 -0.04 0.16

951766167 10.7141 30 nan 0.24 10.71 4.63 0.99 0.54 120.07 4 2.76 4 850137696 0.53 0.99 10.94 253.75 0.32 0.25 0.01 0 good 0.69 95.02 0 -0.04 0.02

951766173 9.36047 30 nan 0.36 9.36 6.64 1 0.54 266.39 5 5.07 5 850137696 0.46 0.99 18.53 56.64 0.87 0.23 0 0 good 0.34 80.08 0 -0.12 0

951766180 10.412 40 nan 0.18 10.41 5.04 1 0.58 162.85 6 3.28 6 850137692 0.49 0.99 13.94 93.89 0.67 0.18 0 0 good -0.41 90.48 0 -0.1 0.01

951766188 5.87175 60 -0.69 0.12 5.87 1.96 0.86 0.58 96.41 7 2.41 7 850137698 0.58 0.99 20.3 264.18 0.24 0.27 0.5 0.01 good 0.55 40.56 0.01 -0.04 0.13

951766195 8.16663 40 nan 0.08 8.17 3.18 0.95 0.56 122.78 8 2.7 8 850137696 0.52 0.99 14.95 182.92 0.36 0.22 0 0 good 0 62.59 0 -0.05 0.11

951766203 1.90221 50 -0.34 0.51 1.9 6.8 0.98 0.52 227.15 9 5.32 9 850137702 0.71 0.99 17.68 67.62 0.7 0.43 0 0 good 0.69 80.87 0 -0.15 0

951766208 12.5293 60 -0.34 0.13 12.53 3.63 0.97 0.56 90.46 10 2.7 10 850137702 0.51 0.99 21.4 191.77 0.24 0.25 0.08 0 good 0.21 61.47 0.01 -0.05 0.22

951766214 0.0589 60 0 nan 0.06 5.75 0 0.55 172.69 11 3.83 11 850137702 0.87 0.55 17.26 23.89 0.51 0.67 0.09 0 noise 0.48 34.46 0 -0.1 11.16

951766220 0.17577 60 nan nan 0.18 2.4 0 0.59 76.38 12 1.41 12 850137696 0.8 0.99 37.98 194.45 0.18 0.34 0.3 0 good 0.37 28.48 0.02 -0.03 0

951766225 5.32087 50 0.69 0.42 5.32 6.1 1 0.55 168.69 13 3.98 13 850137700 0.71 0.99 33.84 69.2 0.48 0.33 0 0 good 0.41 61.97 0 -0.1 0

951766229 4.65135 60 -0.34 0.31 4.65 6.3 0.97 0.52 125.53 14 3.9 14 850137704 0.41 0.99 14.89 89.04 0.4 0.18 0 0 good 0.48 88.58 0 -0.06 0.04

951766235 0.96609 60 -2.06 0.21 0.97 6.33 0.99 0.55 56.42 15 1.71 15 850137704 3.26 0.76 50.53 593.52 0.21 0.21 0.5 0 good 0.41 113.93 0 -0.01 0

951766240 5.05385 80 0 0.1 5.05 2.98 0.74 0.58 69.18 16 2.12 16 850137702 0.54 0.99 19.23 196 0.18 0.26 0.38 0 good 1.47 51.45 0.01 -0.04 0.1

951766245 3.38342 70 0 0.19 3.38 7.22 0.97 0.58 130.8 17 3.98 17 850137706 0.39 0.99 21.13 85.17 0.4 0.19 0 0 good 0.55 74.94 0 -0.06 0.03

951766251 6.44888 80 0 0.11 6.45 2.89 0.88 0.6 64.94 18 2.3 18 850137706 0.47 0.99 18.91 203.71 0.14 0.34 0.5 0.01 good -0.55 57.77 0.01 -0.03 0.07

951766256 0.70733 80 -0.21 0.04 0.71 2.75 0.28 0.54 48.97 19 1.6 19 850137706 0.42 0.99 30.38 616.5 0.14 0.23 0.33 0 good 0 36.52 0.01 -0.03 0.08

951766262 6.33583 80 -0.27 0.09 6.34 4.65 0.92 0.47 89.61 20 2.99 20 850137710 0.65 0.99 21.62 101.34 0.28 0.27 0 0 good 0.41 57.29 0.01 -0.05 0.05

951766266 8.58318 80 0.14 0.15 8.58 4.64 0.88 0.59 74.93 21 2.61 21 850137710 0.55 0.99 17.37 130.59 0.2 0.25 0.09 0 good 0.62 69.35 0 -0.03 0.09

951766271 6.02665 90 -0.34 0.05 6.03 3.36 0.85 0.59 65.11 22 2.3 22 850137710 0.58 0.99 17.03 146.86 0.18 0.25 0.5 0 good 0.96 56.91 0.01 -0.04 0.17

951766276 2.62132 90 -0.34 0.15 2.62 3.57 0.76 0.66 62.41 23 1.7 23 850137712 0.74 0.99 21.51 385.77 0.12 0.34 0.5 0 good -0.34 52.6 0 -0.03 0.12

951766280 4.53841 70 -1.24 0.13 4.54 3.43 0.95 0.67 109.6 24 2.72 24 850137712 0.51 0.99 27.75 122.79 0.31 0.27 0.02 0 good 0.41 61.25 0 -0.05 0.07

951766286 6.32157 50 -1.37 0.21 6.32 4.1 0.98 0.59 172.64 25 3.88 25 850137712 0.5 0.99 20.87 39.08 0.49 0.23 0 0 good 1.03 88.39 0 -0.07 0

951766291 2.9985 70 -0.69 0.11 3 2.97 0.74 0.59 73.1 26 2.27 26 850137712 0.55 0.99 33.54 270.2 0.2 0.22 0.21 0 good 0.14 55.24 0 -0.03 0.03

951766298 8.71307 80 0 0.16 8.71 4.07 0.99 0.55 90.75 27 2.61 27 850137714 0.48 0.99 19.79 119.99 0.25 0.3 0 0 good 1.1 94.31 0 -0.04 0.03

951766303 9.10947 60 -0.62 0.16 9.11 4.16 0.98 0.66 146.33 28 3.85 28 850137716 0.63 0.93 25.55 129.01 0.38 0.27 0.02 0 good 0.34 84.8 0 -0.06 0.05

951766309 27.6031 50 -1.72 0.07 27.6 5.72 1 0.84 132.06 29 2.54 29 850137718 0.35 0.99 8.98 49.88 0.25 0.34 0 0 good 0.69 117.47 0 -0.02 0

951766314 13.9623 80 -0.48 0.08 13.96 4.4 0.99 0.65 133.62 30 3.22 30 850137718 0.58 0.99 15.56 74.63 0.25 0.34 0.01 0 good 0.89 101.42 0 -0.08 0.02

951766322 13.2362 70 -0.69 0.19 13.24 6.17 1 0.62 171.41 32 4.1 31 850137718 0.51 0.99 26.18 58.06 0.39 0.29 0 0 good 1.3 113.45 0 -0.09 0.01

951766327 20.8808 50 -0.69 0.17 20.88 3.34 0.98 0.62 77.33 33 2 32 850137720 0.21 0.99 6.15 59.93 0.23 0.22 0.37 0.01 good 0.69 117.81 0 -0 0.03

951766334 9.46226 80 -0.34 0.18 9.46 5.24 0.98 0.59 161.36 34 4.05 33 850137716 0.56 0.99 33.4 71.71 0.43 0.27 0 0 good 1.37 69.3 0 -0.08 0.01

951766340 3.49089 60 -0.21 0.15 3.49 3.38 0.75 0.62 154.86 35 3.83 34 850137720 0.58 0.99 13.15 79.16 0.38 0.3 0.07 0 good 1.03 35.08 0.03 -0.07 0.18

951766346 7.1218 70 -0.34 0.05 7.12 2.46 0.76 0.65 88.55 36 2.31 35 850137720 0.48 0.99 25.61 131.8 0.23 0.27 0.22 0.01 good 1.03 50.06 0.01 -0.03 1.47